C++双线程调用网络摄像头与多线程调用多摄像头同步执行方法详细讲解

zk_ken 人气:0在调用网络摄像头处理自己的算法时,当解码的速度与算法运行的速度差太多时,会出现类似下面的错误

error while decoding MB 148 4, bytestream

所以需要使用两个线程,一个线程调用摄像头,一个线程用来处理图像。

一、使用双线程调用网络摄像头并执行算法

方法一

#include <iostream>

#include <thread>

#include <mutex>

#include <atomic>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

static std::mutex mutex;

static std::atomic_bool isOpen;

static void cameraThreadFunc(std::string camId, cv::Mat* pFrame)

{

cv::VideoCapture capture(camId);

//capture.set(cv::CAP_PROP_FOURCC, CV_FOURCC('M', 'J', 'P', 'G'));

capture.set(cv::CAP_PROP_FPS, 30);

if (!capture.isOpened()) {

isOpen = false;

std::cout << "Failed to open camera with index " << camId << std::endl;

}

cv::Mat frame;

while (isOpen) {

capture >> frame;

if (mutex.try_lock()) {

frame.copyTo(*pFrame);

mutex.unlock();

}

cv::waitKey(5);

}

capture.release();

}

int main(int argc, char* argv[])

{

std::string rtsps = "rtsp://admin:bs123456@192.168.1.64:554/h264/ch1/main/av_stream/1";

isOpen = true;

cv::Mat frame(1440, 2560, CV_8UC3);

//cv::Mat* frame = new cv::Mat(1440, 2560, CV_8UC3);

std::thread thread(cameraThreadFunc, rtsps, &frame); //调用摄像头

//std::thread thread(cameraThreadFunc, rtsps, frame);

//*****************************************************

//调用自己的算法处理图像

void* p_algorithm;

p_algorithm = (void*)(new WindNetDetect());

std::string net_bins = "./models/visdrone_1009-opt.bin";

std::string net_paras = "./models/visdrone_1009-opt.param";

int init_res = ((WindNetDetect*)p_algorithm)->init1(net_bins, net_paras);

WindNetDetect* tmp = (WindNetDetect*)(p_algorithm);

std::vector<Object> objects;

//*****************************************************

while (isOpen) {

//*********************************

//调用目标检测的算法

tmp->detect(frame, objects);

tmp->draw_objects(frame, objects);

//*********************************

if (cv::waitKey(1) == 'q') {

break;

}

}

frame.release();

isOpen = false;

thread.join();

return 0;

}方法二

// video_test.cpp

#include <iostream>

#include <string>

#include <thread>

#include <mutex>

#include <atomic>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

class VideoCaptureMT {

public:

VideoCaptureMT(int index, int height=480, int width=640);

VideoCaptureMT(std::string filePath, int height=480, int width=640);

~VideoCaptureMT();

bool isOpened() {

return m_IsOpen;

}

void release() {

m_IsOpen = false;

}

bool read(cv::Mat& frame);

private:

void captureInit(int index, std::string filePath, int height, int width);

void captureFrame();

cv::VideoCapture* m_pCapture;

cv::Mat* m_pFrame;

std::mutex* m_pMutex;

std::thread* m_pThread;

std::atomic_bool m_IsOpen;

};

VideoCaptureMT::VideoCaptureMT(int index, int height, int width)

{

captureInit(index, std::string(), height, width);

}

VideoCaptureMT::VideoCaptureMT(std::string filePath, int height, int width)

{

captureInit(0, filePath, height, width);

}

VideoCaptureMT::~VideoCaptureMT()

{

m_IsOpen = false;

m_pThread->join();

if (m_pCapture->isOpened()) {

m_pCapture->release();

}

delete m_pThread;

delete m_pMutex;

delete m_pCapture;

delete m_pFrame;

}

void VideoCaptureMT::captureInit(int index, std::string filePath, int height, int width)

{

if (!filePath.empty()) {

m_pCapture = new cv::VideoCapture(filePath);

}

else {

m_pCapture = new cv::VideoCapture(index);

}

m_pCapture->set(cv::CAP_PROP_FRAME_WIDTH, width);

m_pCapture->set(cv::CAP_PROP_FRAME_HEIGHT, height);

m_pCapture->set(cv::CAP_PROP_FPS, 30);

m_IsOpen = true;

m_pFrame = new cv::Mat(height, width, CV_8UC3);

m_pMutex = new std::mutex();

m_pThread = new std::thread(&VideoCaptureMT::captureFrame, this);

}

void VideoCaptureMT::captureFrame()

{

cv::Mat frameBuff;

while (m_IsOpen) {

(*m_pCapture) >> frameBuff;

if (m_pMutex->try_lock()) {

frameBuff.copyTo(*m_pFrame);

m_pMutex->unlock();

}

cv::waitKey(5);

}

}

bool VideoCaptureMT::read(cv::Mat& frame)

{

if (m_pFrame->empty()) {

m_IsOpen = false;

}

else {

m_pMutex->lock();

m_pFrame->copyTo(frame);

m_pMutex->unlock();

}

return m_IsOpen;

}

int main(int argc, char* argv[])

{

VideoCaptureMT capture(0);

cv::Mat frame, gray;

while (capture.isOpened()) {

if (!capture.read(frame)) {

break;

}

cv::cvtColor(frame, gray, cv::COLOR_BGR2GRAY);

cv::blur(gray, gray, cv::Size(3, 3));

cv::Canny(gray, gray, 5 , 38 , 3);

cv::waitKey(100);

cv::imshow("image", gray);

if (cv::waitKey(5) == 'q') {

break;

}

}

capture.release();

return 0;

}二、使用多线程调用多路摄像头并同步执行多个算法

使用两个线程调用两个函数,get_cam1中包含了调用网络摄像头的类VideoCaptureMT (见上面代码),以及调用算法。另外一个函数是一样的。代码如下 :

void get_cam1()

{

clock_t start, end, end1, end2;

std::string rtsps = "rtsp://admin:bs123456@192.168.1.64:554/h264/ch1/main/av_stream/1";

VideoCaptureMT capture(rtsps);

//VideoCaptureMT capture, captureusb;

cv::namedWindow("外接摄像头检测", 0);

cv::Mat frame, gray;

while (capture.isOpened()) {

std::lock_guard<std::mutex> mtx_locker(mtx);

start = clock();

if (!capture.read(frame)) {

break;

}

//gray = frame;

end = clock();

std::vector<Object> objects;

tmp->detect(frame, objects);

for (size_t i = 0; i < objects.size(); i++)

{

const Object& obj = objects[i];

fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

cv::rectangle(frame, obj.rect, cv::Scalar(255, 0, 0));

char text[256];

//sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

sprintf(text, "%s", class_names[obj.label]);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > frame.cols)

x = frame.cols - label_size.width;

cv::rectangle(frame, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(frame, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

//cv::imwrite("./result20.jpg", image);

cv::imshow("外接摄像头检测", frame);

end2 = clock();

float rumtime = (float)(end2 - start) / CLOCKS_PER_SEC;

std::stringstream buf;

buf.precision(3);//����Ĭ�Ͼ���

buf.setf(std::ios::fixed);//����С��λ

buf << rumtime;

std::string strtime;

strtime = buf.str();

std::cout << "strtime1111 = " << strtime << std::endl;

//start = end2;

if (cv::waitKey(5) == 'q') {

break;

}

}

capture.release();

}

void get_camusb()

{

clock_t start, end, end1, end2;

std::string rtsps = "rtsp://wowzaec2demo.streamlock.net/vod/mp4:BigBuckBunny_115k.mp4";

VideoCaptureMT capture(rtsps);

cv::Mat frameusb;

cv::namedWindow("外接摄像头检测1", 0);

//void* p_algorithmusb;

//p_algorithmusb = (void*)(new WindNetDetect());

//std::string net_binsusb = "./models/visdrone_1009-opt.bin";

//std::string net_parasusb = "./models/visdrone_1009-opt.param";

//int init_res = ((WindNetDetect*)p_algorithmusb)->init1(net_binsusb, net_parasusb);

//WindNetDetect* tmp = (WindNetDetect*)(p_algorithmusb);

while (capture.isOpened()) {

std::lock_guard<std::mutex> mtx_locker(mtx);

start = clock();

if (!capture.read(frameusb)) {

break;

}

//gray = frame;

end = clock();

std::vector<Object> objectsusb;

tmp->detect(frameusb, objectsusb);

for (size_t i = 0; i < objectsusb.size(); i++)

{

const Object& obj = objectsusb[i];

fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

cv::rectangle(frameusb, obj.rect, cv::Scalar(255, 0, 0));

char text[256];

//sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

sprintf(text, "%s", class_names[obj.label]);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > frameusb.cols)

x = frameusb.cols - label_size.width;

cv::rectangle(frameusb, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(frameusb, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

//cv::imwrite("./result20.jpg", image);

cv::imshow("外接摄像头检测1", frameusb);

end2 = clock();

float rumtime = (float)(end2 - start) / CLOCKS_PER_SEC;

std::stringstream buf;

buf.precision(3);//����Ĭ�Ͼ���

buf.setf(std::ios::fixed);//����С��λ

buf << rumtime;

std::string strtime;

strtime = buf.str();

std::cout << "strtime1111 = " << strtime << std::endl;

//start = end2;

if (cv::waitKey(5) == 'q') {

break;

}

}

capture.release();

}

int main() {

void* p_algorithm;

p_algorithm = (void*)(new WindNetDetect());

std::string net_bins = "./models/visdrone_1009-opt.bin";

std::string net_paras = "./models/visdrone_1009-opt.param";

int init_res = ((WindNetDetect*)p_algorithm)->init1(net_bins, net_paras);

tmp = (WindNetDetect*)(p_algorithm);

//HANDLE hThread1 = CreateThread(NULL, 0, get_cam1, NULL, 0, NULL);

//CloseHandle(hThread1);

//HANDLE hThread2 = CreateThread(NULL, 0, get_camusb, NULL, 0, NULL);

//CloseHandle(hThread2);

std::thread thrd_1(get_cam1);

std::thread thrd_2(get_camusb);

thrd_1.join();

thrd_2.join();

while (true)

{

std::cout << "Main Thread Display!" << std::endl;

Sleep(3000);

}

return 0;

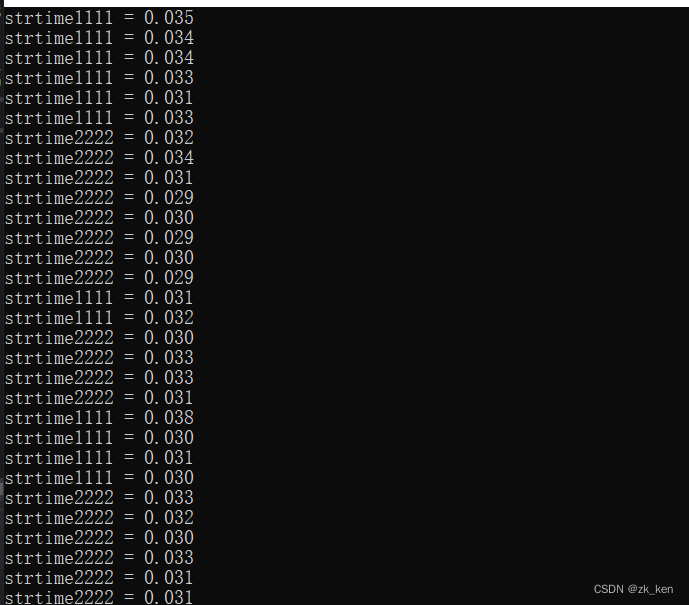

}运行上面的代码时,两个函数没有同步运行。如下strtime1111表示一个线程调用的函数,strtime2222表示另一个线程调用的函数,能看出没有同时调用两个函数。

所以需对代码进行如下改进:

static std::mutex mutexk;

void get_cam1()

{

clock_t start, end, end1, end2;

std::string rtsps = "rtsp://admin:bs123456@192.168.1.64:554/h264/ch1/main/av_stream/1";

VideoCaptureMT capture(rtsps);

cv::Mat frame;

cv::namedWindow("ss", 0);

while (capture.isOpened()) {

//pthread_rwlock_rdlock(&rwlock);

//std::lock_guard<std::mutex> mtx_locker(mtx);

start = clock();

if (!capture.read(frame)) {

break;

}

if (mutexk.try_lock()) {

//gray = frame;

end = clock();

std::vector<Object> objects;

tmp->detect(frame, objects);

//tmp->draw_objects(frame, objects);

for (size_t i = 0; i < objects.size(); i++)

{

const Object& obj = objects[i];

fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

cv::rectangle(frame, obj.rect, cv::Scalar(255, 0, 0));

char text[256];

//sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

sprintf(text, "%s", class_names[obj.label]);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > frame.cols)

x = frame.cols - label_size.width;

cv::rectangle(frame, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(frame, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

//cv::imwrite("./result20.jpg", image);

cv::imshow("ss", frame);

end2 = clock();

float rumtime = (float)(end2 - start) / CLOCKS_PER_SEC;

std::stringstream buf;

buf.precision(3);//����Ĭ�Ͼ���

buf.setf(std::ios::fixed);//����С��λ

buf << rumtime;

std::string strtime;

strtime = buf.str();

std::cout << "strtime2222 = " << strtime << std::endl;

//start = end2;

if (cv::waitKey(5) == 'q') {

break;

}

mutexk.unlock();

}

}

capture.release();

}

void get_camusb()

{

//std::lock_guard<std::mutex> mtx_locker(mtx);

clock_t start, end, end1, end2;

//std::string rtsps = "rtsp://wowzaec2demo.streamlock.net/vod/mp4:BigBuckBunny_115k.mp4";

std::string rtsps = "rtsp://admin:bs123456@192.168.1.64:554/h264/ch1/main/av_stream/1";

VideoCaptureMT capture(rtsps);

cv::Mat frameusb;

cv::namedWindow("zz1", 0);

while (capture.isOpened()) {

std::lock_guard<std::mutex> mtx_locker1(mtx);

start = clock();

if (!capture.read(frameusb)) {

break;

}

if (mutexk.try_lock()) {

//gray = frame;

end = clock();

std::vector<Object> objectsusb;

tmp->detect(frameusb, objectsusb);

//tmp->draw_objects(frameusb, objectsusb);

for (size_t i = 0; i < objectsusb.size(); i++)

{

const Object& obj = objectsusb[i];

fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,

obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

cv::rectangle(frameusb, obj.rect, cv::Scalar(255, 0, 0));

char text[256];

//sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

sprintf(text, "%s", class_names[obj.label]);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int x = obj.rect.x;

int y = obj.rect.y - label_size.height - baseLine;

if (y < 0)

y = 0;

if (x + label_size.width > frameusb.cols)

x = frameusb.cols - label_size.width;

cv::rectangle(frameusb, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(frameusb, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

//cv::imwrite("./result20.jpg", image);

cv::imshow("zz1", frameusb);

end2 = clock();

float rumtime = (float)(end2 - start) / CLOCKS_PER_SEC;

std::stringstream buf;

buf.precision(3);//����Ĭ�Ͼ���

buf.setf(std::ios::fixed);//����С��λ

buf << rumtime;

std::string strtime;

strtime = buf.str();

std::cout << "strtime1111 = " << strtime << std::endl;

//start = end2;

if (cv::waitKey(5) == 'q') {

break;

}

mutexk.unlock();

}

}

capture.release();

}

int main() {

void* p_algorithm;

p_algorithm = (void*)(new WindNetDetect());

std::string net_bins = "./models/visdrone_1009-opt.bin";

std::string net_paras = "./models/visdrone_1009-opt.param";

int init_res = ((WindNetDetect*)p_algorithm)->init1(net_bins, net_paras);

tmp = (WindNetDetect*)(p_algorithm);

std::thread thrd_1(get_cam1);

std::thread thrd_2(get_camusb);

thrd_1.join();

thrd_2.join();

while (true)

{

std::cout << "Main Thread Display!" << std::endl;

Sleep(3000);

}

return 0;

}使用多线线程调用多个函数同步执行模板如下:

#include <thread>

#include <mutex>

static std::mutex mutexk;

void func1() {

while (capture1.isOpened()) {

if (mutexk.try_lock()) {

//我的算法

my_func1();

mutexk.unlock();

}

}

}

void func2() {

while (capture2.isOpened()) {

if (mutexk.try_lock()) {

//我的算法

my_func2();

mutexk.unlock();

}

}

}

int main() {

std::thread thrd_1(func1);

std::thread thrd_2(func2);

thrd_1.join();

thrd_2.join();

return 0;

}在ubuntu中c++opencv多线程显示图片时出现Gdk-WARNING **: xx: Fatal IO error

解决办法:

1、sudo apt install libx11-dev

2、在代码中添加头文件#include <X11/Xlib.h>

3、在创建线程之前添加XInitThreads()

4、在编译的时候需要添加-lX11

加载全部内容