Vue: a moving floating box with audio recording

Let the music play on, and keep dancing! Here is the result:

Main features

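1. A floating ball that moves freely across the whole screen, automatically changes direction when it hits an edge or corner, and comes with its own animation.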

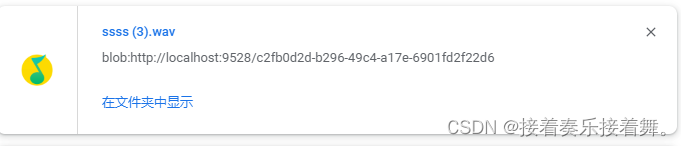

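2. Audio recording: records the user's voice. The recording (encoded as WAV by recorder.js) can be downloaded locally as an audio file or sent to the backend as a binary stream.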

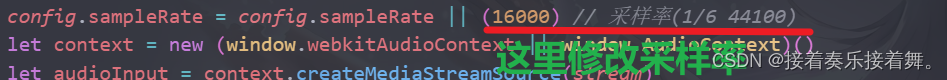

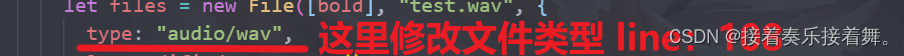
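There are two ways to send the recording to the backend as binary data. The first puts the WAV `Blob` inside a multipart form, which is what `upload()` in recorder.js (listed at the end) does with `XMLHttpRequest`. The second posts the `Blob` as the raw request body.

The sketch below uses `fetch`. A few things in it are assumptions:

- The endpoint `/api/asr` is hypothetical.
- The helper names `buildAudioForm`, `uploadRecording` and `uploadRaw` are hypothetical.
- The field name `audioData` is borrowed from recorder.js.

Match the endpoint and field name to what your backend expects.

```javascript
// Hypothetical helper: wrap a recorded WAV Blob in FormData as a named file field.
// "audioData" is the field name used by recorder.js's upload(); change it as needed.
function buildAudioForm(wavBlob, filename = "record.wav") {
  const fd = new FormData();
  fd.append("audioData", wavBlob, filename);
  return fd;
}

// Hypothetical endpoint; assumes the backend accepts multipart/form-data.
async function uploadRecording(wavBlob, url = "/api/asr") {
  const res = await fetch(url, { method: "POST", body: buildAudioForm(wavBlob) });
  if (!res.ok) throw new Error(`Upload failed: HTTP ${res.status}`);
  return res;
}

// Alternative: send the raw WAV bytes as the request body instead of a form field.
async function uploadRaw(wavBlob, url = "/api/asr") {
  const res = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "audio/wav" },
    body: wavBlob,
  });
  if (!res.ok) throw new Error(`Upload failed: HTTP ${res.status}`);
  return res;
}
```

In the Audio component, `this.files` is already a `File`, which is a `Blob` subclass. It can therefore be passed to either function, for example `await uploadRecording(this.files)`.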

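Because the backend requires WAV or PCM audio at a 16000 Hz sample rate, I changed the configuration: the `sampleRate` default (and, if needed, `sampleBits`) at the top of `HZRecorder` in recorder.js. You can set these to whatever your backend needs.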
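The browser captures microphone audio at the `AudioContext`'s own rate, commonly 44100 or 48000 Hz, so producing 16000 Hz means downsampling.

- From 48000 Hz, an integer step of 3 is exact.
- From 44100 Hz, the step is 44100 / 16000 = 2.75625. Truncating it to 2 would give 22050 Hz data labelled as 16000 Hz, which plays back slowed down.

Below is a sketch of decimation with a fractional step. It is plain sample picking with no low-pass filter, so some aliasing is possible. `downsample` is a hypothetical helper, not part of recorder.js.

```javascript
// Hypothetical helper: downsample mono Float32 PCM from inputRate to outputRate
// by picking the sample at each fractional source position (rounded down).
// No anti-aliasing filter is applied; content above outputRate / 2 may alias.
function downsample(samples, inputRate, outputRate) {
  if (outputRate >= inputRate) return Float32Array.from(samples); // never upsample
  // Integer products keep lengths and indices free of float rounding surprises.
  const outLength = Math.floor((samples.length * outputRate) / inputRate);
  const out = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    // Source position is i * (inputRate / outputRate), e.g. i * 2.75625 for 44.1 kHz.
    out[i] = samples[Math.floor((i * inputRate) / outputRate)];
  }
  return out;
}

// One second of 44100 Hz input becomes exactly one second at 16000 Hz.
console.log(downsample(new Float32Array(44100), 44100, 16000).length); // 16000
```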

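Note: the code is already packaged as components, and the code below can be used with minor modifications. The places that need changing are shown in the screenshots.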

Implementation

1. The first component: the floating ball, FloatBall.vue

```vue
<template>
  <!-- Floating, ad-style tip box -->
  <div
    id="thediv"
    ref="thediv"
    style="position: absolute; z-index: 111; left: 0; top: 0"
    @mouseenter="clearFdAd"
    @mouseleave="starFdAd"
  >
    <div
      style="
        overflow: hidden;
        cursor: pointer;
        text-align: right;
        font-size: 0.0625rem;
        color: #999999;
      "
    >
      <div @click="demo" style="position: relative">
        <!-- Recorder component; .stop keeps clicks on its buttons from toggling the panel closed -->
        <div v-show="isAudio" @click.stop>
          <AudioRecorder style="position: absolute; top: 10%; right: 20%" />
        </div>
        <div class="loader">
          <span v-for="n in 10" :key="n"></span>
        </div>
        <!-- <img src="@/assets/common/loginlogo.png" alt="" /> -->
      </div>
    </div>
  </div>
</template>

<script>
// Adjust the path to wherever Audio.vue lives. It is registered as AudioRecorder
// to avoid confusion with the native <audio> element and window.Audio.
import AudioRecorder from "./Audio.vue"

export default {
  components: { AudioRecorder },
  data () {
    return {
      isAudio: false, // whether the recorder panel is shown
      xPos: 0,
      yPos: 0,
      step: 1, // pixels moved per tick
      delay: 18, // milliseconds between ticks
      Hoffset: 0, // element height
      Woffset: 0, // element width
      yon: 0, // 1 = moving down, 0 = moving up
      xon: 0, // 1 = moving right, 0 = moving left
      interval: null, // per-instance timer instead of a module-level variable
    }
  },
  mounted () {
    this.starFdAd()
  },
  beforeDestroy () {
    // Stop the animation when the component is removed
    this.clearFdAd()
  },
  methods: {
    demo () {
      this.isAudio = !this.isAudio
    },
    changePos () {
      const el = this.$refs.thediv
      if (!el) return
      const width = document.documentElement.clientWidth
      const height = document.documentElement.clientHeight
      this.Hoffset = el.offsetHeight
      this.Woffset = el.offsetWidth

      // Variant that follows the screen when the page scrolls:
      // el.style.left = (this.xPos + document.body.scrollLeft + document.documentElement.scrollLeft) + "px";
      // el.style.top = (this.yPos + document.body.scrollTop + document.documentElement.scrollTop) + "px";

      // Variant that does not follow the screen when the page scrolls.
      // The -400 is a layout-specific offset from the original page; adjust or remove it.
      el.style.left = this.xPos + document.body.scrollLeft - 400 + "px"
      el.style.top = this.yPos + document.body.scrollTop + "px"

      // Vertical movement: bounce off the top and bottom edges
      this.yPos += this.yon ? this.step : -this.step
      if (this.yPos < 0) {
        this.yon = 1
        this.yPos = 0
      }
      if (this.yPos >= height - this.Hoffset) {
        this.yon = 0
        this.yPos = height - this.Hoffset
      }

      // Horizontal movement: bounce off the left and right edges
      this.xPos += this.xon ? this.step : -this.step
      if (this.xPos < 0) {
        this.xon = 1
        this.xPos = 0
      }
      if (this.xPos >= width - this.Woffset) {
        this.xon = 0
        this.xPos = width - this.Woffset
      }
    },
    // Pause while the mouse is over the ball
    clearFdAd () {
      clearInterval(this.interval)
      this.interval = null
    },
    // Resume when the mouse leaves; clear first so timers never stack up
    starFdAd () {
      this.clearFdAd()
      this.interval = setInterval(this.changePos, this.delay)
    },
  },
}
</script>

<style lang="scss" scoped>
#thediv {
  z-index: 100;
  position: absolute;
  top: 0.224rem;
  left: 0.0104rem;
  height: 0.9583rem;
  width: 1.4583rem;
  overflow: hidden;
  img {
    width: 100%;
    height: 100%;
  }
}

// CSS-only icon: animated bars inside a circle
.loader {
  width: 100px;
  height: 100px;
  padding: 30px;
  font-size: 10px;
  background-color: #91cc75;
  border-radius: 50%;
  border: 8px solid #20a088;
  display: flex;
  align-items: center;
  justify-content: space-between;
  animation: loader-animate 1.5s infinite ease-in-out;
}

@keyframes loader-animate {
  45%,
  55% {
    transform: scale(1);
  }
}

.loader > span {
  width: 5px;
  height: 50%;
  background-color: #fff;
  transform: scaleY(0.05) translateX(-5px);
  animation: span-animate 1.5s infinite ease-in-out;
  // Stagger each bar by its index --n
  animation-delay: calc(var(--n) * 0.05s);
}

@keyframes span-animate {
  0%,
  100% {
    transform: scaleY(0.05) translateX(-5px);
  }
  15% {
    transform: scaleY(1.2) translateX(10px);
  }
  90%,
  100% {
    background-color: hotpink;
  }
}

// Bar index used by animation-delay
.loader > span:nth-child(1) { --n: 1; }
.loader > span:nth-child(2) { --n: 2; }
.loader > span:nth-child(3) { --n: 3; }
.loader > span:nth-child(4) { --n: 4; }
.loader > span:nth-child(5) { --n: 5; }
.loader > span:nth-child(6) { --n: 6; }
.loader > span:nth-child(7) { --n: 7; }
.loader > span:nth-child(8) { --n: 8; }
.loader > span:nth-child(9) { --n: 9; }
.loader > span:nth-child(10) { --n: 10; }
</style>
```
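Stripped of the DOM access, the bouncing in `changePos` is a small per-tick update. It moves `step` pixels along each axis. When the ball would cross an edge, it is clamped to that edge and its direction on that axis is reversed.

Below is a minimal sketch of that logic as a pure function, which makes it easy to test. Two things are illustrative assumptions:

- the `bounceStep` name;
- its `{ x, y, dx, dy }` state shape, which uses `dx`/`dy` = ±1 instead of the component's `xon`/`yon` flags.

```javascript
// Hypothetical helper mirroring changePos: advance by `step` pixels on each axis,
// then clamp to the viewport and reverse direction at any edge that was reached.
function bounceStep(state, step, viewportW, viewportH, ballW, ballH) {
  let { x, y, dx, dy } = state;
  x += dx * step;
  y += dy * step;
  const maxX = viewportW - ballW; // rightmost allowed left offset
  const maxY = viewportH - ballH; // lowest allowed top offset
  if (x < 0) { x = 0; dx = 1; }          // hit the left edge: move right
  if (x >= maxX) { x = maxX; dx = -1; }  // hit the right edge: move left
  if (y < 0) { y = 0; dy = 1; }          // hit the top edge: move down
  if (y >= maxY) { y = maxY; dy = -1; }  // hit the bottom edge: move up
  return { x, y, dx, dy };
}

// A 100x100 ball in a 300x200 viewport, one pixel away from the bottom-right corner.
let s = { x: 199, y: 99, dx: 1, dy: 1 };
s = bounceStep(s, 1, 300, 200, 100, 100);
console.log(s); // { x: 200, y: 100, dx: -1, dy: -1 }
```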

2. The second component: the recorder, Audio.vue

This component depends on another file, recorder.js, which is listed at the end.

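```vue
<template>
  <div>
    <!-- Record / stop / re-record button; the label also shows the countdown -->
    <el-button @click="myrecording" style="margin-left: 1rem">{{ time }}</el-button>
    <!-- playing === true means the audio is paused, so the button offers "Play" -->
    <el-button @click="startPlay" :disabled="!fileurl" style="margin-left: 1rem">{{
      playing ? "Play" : "Pause"
    }}</el-button>
    <el-button @click="delvioce" style="margin-left: 1rem; color: black">Delete</el-button>
    <audio
      v-if="fileurl"
      :src="fileurl"
      controls="controls"
      style="display: none"
      ref="audio"
      id="myaudio"
      @ended="playing = true"
    ></audio>
  </div>
</template>

<script>
// Import recorder.js
import recording from "@/utils/recorder"

export default {
  data () {
    return {
      RecordingSwitch: true, // true: the next click starts recording
      files: "", // recorded audio file
      num: 60, // remaining recording time in seconds
      recorder: null,
      fileurl: "", // object URL of the recording
      interval: "", // countdown timer
      time: "Click to speak (60s)",
      playing: true, // true: paused, so the button shows "Play"
    }
  },
  beforeDestroy () {
    this.clearTimer()
    if (this.recorder) this.recorder.stop()
    if (this.fileurl) URL.revokeObjectURL(this.fileurl)
  },
  methods: {
    // Record button clicked. Start() and End() set RecordingSwitch themselves,
    // so it stays correct even when recording stops automatically after 60 seconds.
    myrecording () {
      if (this.files === "") {
        if (this.RecordingSwitch) {
          this.Start()
        } else {
          this.End()
        }
      } else if (this.time === "Click to re-record (60s)") {
        this.files = ""
        this.Start()
      }
    },
    // Play / pause button clicked
    startPlay () {
      const audio = this.$refs.audio
      if (!audio) return // nothing recorded yet
      if (this.playing) {
        audio.play()
      } else {
        audio.pause()
      }
      this.playing = !this.playing
    },
    // Delete the recording
    delvioce () {
      this.clearTimer()
      if (this.recorder) {
        this.recorder.stop()
        this.recorder = null
      }
      if (this.fileurl) URL.revokeObjectURL(this.fileurl)
      this.fileurl = ""
      this.files = ""
      this.num = 60
      this.playing = true
      this.RecordingSwitch = true
      this.time = "Click to speak (60s)"
    },
    // Clear the countdown timer
    clearTimer () {
      if (this.interval) {
        this.num = 60
        clearInterval(this.interval)
        this.interval = ""
      }
    },
    // Start recording
    Start () {
      this.clearTimer()
      this.RecordingSwitch = false
      recording.get((rec) => {
        // On first use the browser asks for microphone permission, so rec may arrive late
        if (rec) {
          this.recorder = rec
          this.recorder.start() // start capturing once, not on every tick
          this.time = "Click to stop (" + this.num + "s)"
          this.interval = setInterval(() => {
            this.num--
            if (this.num <= 0) {
              this.End() // time is up: stop automatically
            } else {
              this.time = "Click to stop (" + this.num + "s)"
            }
          }, 1000)
        }
      })
    },
    // Stop recording
    End () {
      this.clearTimer()
      this.RecordingSwitch = true
      if (this.recorder) {
        this.recorder.stop()
        // Reset the speaking time
        this.num = 60
        this.time = "Click to re-record (60s)"
        // Get the recording as a WAV Blob
        const blob = this.recorder.getBlob()
        this.recorder = null
        // Wrap the Blob in a File so it can be uploaded as a binary file
        const files = new File([blob], "test.wav", {
          type: "audio/wav",
          lastModified: Date.now(),
        })
        this.files = files
        // Release the previous object URL, if any, then create a new one
        if (this.fileurl) URL.revokeObjectURL(this.fileurl)
        const fileurl = URL.createObjectURL(files)
        this.fileurl = fileurl
        // Read the audio duration once its metadata has loaded
        const audioElement = new Audio(fileurl)
        audioElement.addEventListener("loadedmetadata", () => {
          console.log("Audio duration (s): " + audioElement.duration, files)
        })
        // Trigger a download of the recording
        const downloadAnchorNode = document.createElement("a")
        downloadAnchorNode.setAttribute("href", fileurl)
        downloadAnchorNode.setAttribute("download", "test.wav")
        // Append before clicking; some older browsers ignore clicks on detached links
        document.body.appendChild(downloadAnchorNode)
        downloadAnchorNode.click()
        downloadAnchorNode.remove()
        this.$message.success("Downloading, please wait...")
      }
    },
  },
}
</script>

<style lang="less">
</style>
```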
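The component reads the duration from the `loadedmetadata` event of an `Audio` element. Because recorder.js writes a canonical 44-byte PCM WAV header, the format and duration can also be read directly from the file's bytes.

The sketch below assumes exactly that layout: the `fmt ` chunk at byte 12 and the `data` chunk at byte 36. It rejects WAV files with extra chunks rather than parsing them. `readWavInfo` is a hypothetical helper.

```javascript
// Hypothetical helper: read the format fields of a canonical 44-byte-header PCM WAV
// and compute its duration as dataLength / byteRate.
function readWavInfo(arrayBuffer) {
  const view = new DataView(arrayBuffer);
  // Read a 4-character ASCII chunk tag at the given byte offset
  const tag = (off) =>
    String.fromCharCode(view.getUint8(off), view.getUint8(off + 1), view.getUint8(off + 2), view.getUint8(off + 3));
  if (arrayBuffer.byteLength < 44 || tag(0) !== "RIFF" || tag(8) !== "WAVE" ||
      tag(12) !== "fmt " || tag(36) !== "data") {
    throw new Error("Not a canonical 44-byte-header PCM WAV");
  }
  const channels = view.getUint16(22, true);
  const sampleRate = view.getUint32(24, true);
  const byteRate = view.getUint32(28, true); // sampleRate * channels * bitsPerSample / 8
  const bitsPerSample = view.getUint16(34, true);
  const dataLength = view.getUint32(40, true); // bytes of sample data after the header
  return { channels, sampleRate, bitsPerSample, dataLength, duration: dataLength / byteRate };
}
```

For example, inside an async function: `const info = readWavInfo(await this.files.arrayBuffer())`.

For 16000 Hz mono 16-bit audio, the byte rate is 32000 bytes/s. A 60-second recording therefore carries 1,920,000 bytes of sample data.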

3. recorder.js

```javascript
// Compatibility shims for older browsers
window.URL = window.URL || window.webkitURL
navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia || navigator.msGetUserMedia

let HZRecorder = function (stream, config) {
  config = config || {}
  config.sampleBits = config.sampleBits || 8 // output bits per sample: 8 or 16
  config.sampleRate = config.sampleRate || 16000 // output sample rate in Hz (originally 44100 / 6)

  let context = new (window.AudioContext || window.webkitAudioContext)()
  let audioInput = context.createMediaStreamSource(stream)
  let createScript = context.createScriptProcessor || context.createJavaScriptNode
  // Buffer size 4096, 1 input channel, 1 output channel
  let recorder = createScript.apply(context, [4096, 1, 1])

  let audioData = {
    size: 0, // number of buffered samples
    buffer: [], // recorded chunks
    inputSampleRate: context.sampleRate, // input (hardware) sample rate
    inputSampleBits: 16, // input bits per sample: 8 or 16
    outputSampleRate: config.sampleRate, // output sample rate
    outputSampleBits: config.sampleBits, // output bits per sample: 8 or 16
    input: function (data) {
      this.buffer.push(new Float32Array(data))
      this.size += data.length
    },
    compress: function () { // merge, then downsample
      // Merge all chunks into one array
      let data = new Float32Array(this.size)
      let offset = 0
      for (let i = 0; i < this.buffer.length; i++) {
        data.set(this.buffer[i], offset)
        offset += this.buffer[i].length
      }
      // Downsample with a fractional step: truncating e.g. 44100 / 16000 to 2 would
      // yield 22050 Hz data labelled as 16000 Hz, which plays back too slowly.
      let outputRate = Math.min(this.inputSampleRate, this.outputSampleRate)
      let length = Math.floor(data.length * outputRate / this.inputSampleRate)
      let result = new Float32Array(length)
      for (let index = 0; index < length; index++) {
        result[index] = data[Math.floor(index * this.inputSampleRate / outputRate)]
      }
      return result
    },
    encodeWAV: function () {
      let sampleRate = Math.min(this.inputSampleRate, this.outputSampleRate)
      let sampleBits = Math.min(this.inputSampleBits, this.outputSampleBits)
      let bytes = this.compress()
      let dataLength = bytes.length * (sampleBits / 8)
      let buffer = new ArrayBuffer(44 + dataLength)
      let data = new DataView(buffer)
      let channelCount = 1 // mono
      let offset = 0
      let writeString = function (str) {
        for (let i = 0; i < str.length; i++) {
          data.setUint8(offset + i, str.charCodeAt(i))
        }
      }
      // RIFF chunk identifier
      writeString('RIFF'); offset += 4
      // Bytes from the next field to the end of the file, i.e. file size - 8
      data.setUint32(offset, 36 + dataLength, true); offset += 4
      // WAV file marker
      writeString('WAVE'); offset += 4
      // Format chunk marker
      writeString('fmt '); offset += 4
      // Format chunk size, 0x10 = 16 for PCM
      data.setUint32(offset, 16, true); offset += 4
      // Audio format: 1 = PCM samples
      data.setUint16(offset, 1, true); offset += 2
      // Number of channels
      data.setUint16(offset, channelCount, true); offset += 2
      // Sample rate: samples per second per channel
      data.setUint32(offset, sampleRate, true); offset += 4
      // Byte rate (average bytes per second): channels × sample rate × bits per sample / 8
      data.setUint32(offset, channelCount * sampleRate * (sampleBits / 8), true); offset += 4
      // Block align (bytes per sample frame): channels × bits per sample / 8
      data.setUint16(offset, channelCount * (sampleBits / 8), true); offset += 2
      // Bits per sample
      data.setUint16(offset, sampleBits, true); offset += 2
      // Data chunk identifier
      writeString('data'); offset += 4
      // Size of the sample data, i.e. file size - 44
      data.setUint32(offset, dataLength, true); offset += 4
      // Write the samples
      if (sampleBits === 8) {
        // 8-bit WAV samples are unsigned: shift by 32768, then scale to 0..255
        for (let i = 0; i < bytes.length; i++, offset++) {
          let s = Math.max(-1, Math.min(1, bytes[i]))
          let val = s < 0 ? s * 0x8000 : s * 0x7FFF
          val = parseInt(255 / (65535 / (val + 32768)))
          data.setUint8(offset, val)
        }
      } else {
        // 16-bit WAV samples are signed little-endian
        for (let i = 0; i < bytes.length; i++, offset += 2) {
          let s = Math.max(-1, Math.min(1, bytes[i]))
          data.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7FFF, true)
        }
      }
      return new Blob([data], { type: 'audio/wav' })
    }
  }

  // Start recording
  this.start = function () {
    audioInput.connect(recorder)
    // Some browsers (notably Chrome) only fire onaudioprocess while the node is connected to a destination
    recorder.connect(context.destination)
  }

  // Stop recording
  this.stop = function () {
    recorder.disconnect()
  }

  // Get the recording as a WAV Blob
  this.getBlob = function () {
    this.stop()
    return audioData.encodeWAV()
  }

  // Playback: point an optional download link and an <audio> element at the recording
  this.play = function (audio) {
    let blobUrl = window.URL.createObjectURL(this.getBlob())
    let downRec = document.getElementById('downloadRec')
    if (downRec) {
      downRec.href = blobUrl
      downRec.download = new Date().toLocaleString() + '.wav'
    }
    audio.src = blobUrl
  }

  // Upload the recording as multipart/form-data
  this.upload = function (url, callback) {
    let fd = new FormData()
    fd.append('audioData', this.getBlob())
    let xhr = new XMLHttpRequest()
    /* eslint-disable */
    if (callback) {
      xhr.upload.addEventListener('progress', function (e) { callback('uploading', e) }, false)
      xhr.addEventListener('load', function (e) { callback('ok', e) }, false)
      xhr.addEventListener('error', function (e) { callback('error', e) }, false)
      xhr.addEventListener('abort', function (e) { callback('cancel', e) }, false)
    }
    /* eslint-enable */
    xhr.open('POST', url)
    xhr.send(fd)
  }

  // Audio capture: buffer each chunk from channel 0
  recorder.onaudioprocess = function (e) {
    audioData.input(e.inputBuffer.getChannelData(0))
  }
}

// Report an error to the user, then throw
HZRecorder.throwError = function (message) {
  alert(message)
  throw new Error(message)
}

// Whether recording is supported
HZRecorder.canRecording = !!((navigator.mediaDevices && navigator.mediaDevices.getUserMedia) || navigator.getUserMedia)

// Get a recorder instance
HZRecorder.get = function (callback, config) {
  if (!callback) return
  let onStream = function (stream) {
    callback(new HZRecorder(stream, config))
  }
  let onError = function (error) {
    console.log(error)
    // Prefer the error name: modern DOMExceptions also carry unrelated numeric legacy codes
    let reason = error.name || error.code
    switch (reason) {
      case 'PERMISSION_DENIED':
      case 'PermissionDeniedError':
      case 'NotAllowedError':
        HZRecorder.throwError('The user denied access to the microphone.')
        break
      case 'NOT_SUPPORTED_ERROR':
      case 'NotSupportedError':
        HZRecorder.throwError('The browser does not support the hardware device.')
        break
      case 'MANDATORY_UNSATISFIED_ERROR':
      case 'MandatoryUnsatisfiedError':
      case 'NotFoundError':
        HZRecorder.throwError('The specified hardware device could not be found.')
        break
      default:
        HZRecorder.throwError('Unable to open the microphone. Error: ' + reason)
        break
    }
  }
  if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
    // Modern promise-based API (only available on HTTPS or localhost)
    navigator.mediaDevices.getUserMedia({ audio: true }).then(onStream, onError)
  } else if (navigator.getUserMedia) {
    // Legacy callback API; audio only
    navigator.getUserMedia({ audio: true }, onStream, onError)
  } else {
    HZRecorder.throwError('This browser does not support audio recording.')
  }
}

export default HZRecorder
```
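In `encodeWAV`, each float sample from the Web Audio API (nominally in the range -1 to 1) is clipped and then scaled to an integer. The two branches differ because of how WAV stores samples:

- 16-bit WAV samples are signed.
- 8-bit WAV samples are unsigned. That is why the 8-bit branch shifts the 16-bit value by 32768 and scales it to 0..255. `parseInt(255 / (65535 / (val + 32768)))` equals `(val + 32768) * 255 / 65535`, truncated.

Below is a standalone sketch of the two conversions. The function names are hypothetical.

```javascript
// Hypothetical helper: float samples -> signed 16-bit PCM, same formula as encodeWAV.
function floatTo16BitPCM(samples) {
  const out = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clip out-of-range input
    out[i] = s < 0 ? s * 0x8000 : s * 0x7fff;        // -1 -> -32768, 1 -> 32767
  }
  return out;
}

// Hypothetical helper: float samples -> unsigned 8-bit PCM, same mapping as the
// 8-bit branch of encodeWAV, so silence (0.0) becomes 127.
function floatTo8BitPCM(samples) {
  const pcm16 = floatTo16BitPCM(samples);
  const out = new Uint8Array(pcm16.length);
  for (let i = 0; i < pcm16.length; i++) {
    // Shift -32768..32767 to 0..65535, then scale down to 0..255
    out[i] = Math.floor(((pcm16[i] + 32768) * 255) / 65535);
  }
  return out;
}

console.log(Array.from(floatTo8BitPCM(Float32Array.from([-1, 0, 1])))); // [ 0, 127, 255 ]
```

At 8 bits, each mono sample takes 1 byte, versus 2 bytes at 16 bits. The 8-bit setting therefore halves the file size at the cost of audible quantization noise. Check which bit depth your backend expects.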