Scraping Douban Reviews with Python

大学生编程地

I. Scraping Douban's Popular Reviews

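This program scrapes the popular reviews of a Douban film and appends them, one JSON object per line, to `yewen.json`. The path is relative, so the file is created in the current working directory, which is the script's own directory when you run it from there.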

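Note: `requests` and `lxml` must be installed first (for example with `pip install requests lxml`). `json` and `time` are part of Python's standard library and need no installation.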
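Because the scraper writes one JSON object per line rather than a single JSON array, the output file is in the format usually called JSON Lines. The following is a minimal sketch of writing and reading that format with only the standard library. The helper names `append_review` and `load_reviews` are hypothetical and not part of the original program, and the demo reviews are made up.

```python
import json
import tempfile
from pathlib import Path


def append_review(path, author, text):
    """Append one review as a single JSON object on its own line."""
    record = {"author": author, "text": text.strip()}
    with open(path, "a", encoding="utf-8") as f:
        # ensure_ascii=False writes Chinese characters as-is instead of \uXXXX escapes
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_reviews(path):
    """Parse every non-empty line back into a dict."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    # Round-trip two made-up reviews through a throwaway file
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "demo.json"
        append_review(demo, "user_a", "  Great fight scenes  ")
        append_review(demo, "user_b", "叶问")
        print(load_reviews(demo))
```

Reading line by line means a single malformed or truncated line (for example from an interrupted run) only affects that record, and new pages can be appended without rewriting the whole file.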

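```python
import json
import time

import requests
from lxml import etree


class Spider(object):

    def __init__(self):
        # Browser-like User-Agent header sent with every request
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                          '(KHTML, like Gecko) Chrome/75.0.3700.100 Safari/537.36'
        }

    def get_data(self, url):
        response = requests.get(url, headers=self.headers, timeout=10)
        response.raise_for_status()  # stop on HTTP errors instead of parsing an error page
        page = etree.HTML(response.content.decode('utf-8'))  # parsed tree for XPath queries

        # One child <div> per review; @class="..." is an exact match,
        # so the trailing space must match the page's HTML
        node_list = page.xpath('//div[@class="review-list "]/div')

        # Append mode: rerunning the script adds duplicate lines to the file
        with open('yewen.json', 'a', encoding='utf-8') as f:
            for node in node_list:
                # Author name
                author = node.xpath('.//header[@class="main-hd"]//a[2]/text()')
                if not author:
                    continue  # skip nodes that are not reviews
                # Review excerpt text
                text = node.xpath('string(.//div[@class="main-bd"]//div[@class="short-content"])')
                items = {
                    'author': author[0],
                    'text': text.strip(),
                }
                # Persist one JSON object per line
                f.write(json.dumps(items, ensure_ascii=False) + '\n')

    def run(self):
        for i in range(1, 47):
            # Page i starts at offset (i - 1) * 20, so page 1 is start=0
            url = 'https://movie.douban.com/subject/26885074/reviews?start={}'.format((i - 1) * 20)
            print('Scraping page {}'.format(i))
            self.get_data(url)
            time.sleep(3)  # pause between requests


if __name__ == '__main__':
    s = Spider()
    s.run()
```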
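The review list is paginated with the `start` query parameter, and the loop in `run()` advances it by 20 per page. As a sketch (the helper `review_page_urls` is hypothetical, and 20 reviews per page is simply the step size the original loop assumes), the page URLs can be generated with `urllib.parse.urlencode`, which always emits the `start=` key and value together:

```python
from urllib.parse import urlencode

BASE = "https://movie.douban.com/subject/{subject_id}/reviews"


def review_page_urls(subject_id, pages, per_page=20):
    """Yield (page_number, url) pairs; page 1 uses start=0."""
    for page in range(1, pages + 1):
        query = urlencode({"start": (page - 1) * per_page})
        yield page, BASE.format(subject_id=subject_id) + "?" + query


if __name__ == "__main__":
    for page, url in review_page_urls("26885074", 3):
        print(page, url)
```

Building the query string this way avoids hand-written format strings such as `reviews?start{}`, where a missing `=` silently produces a URL the server does not interpret as an offset.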

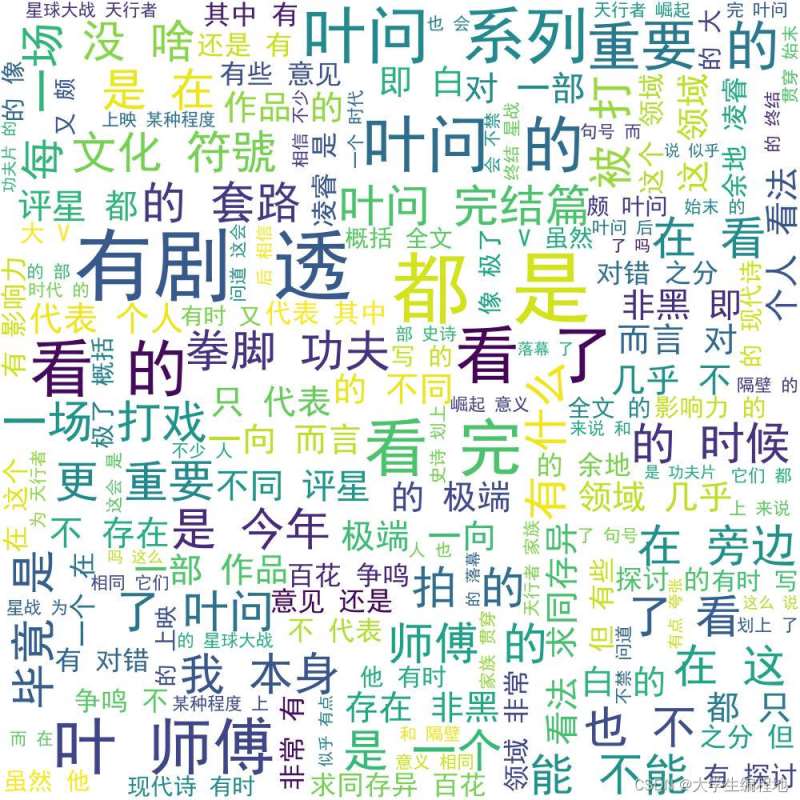

II. Generating a Word Cloud

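This program loads the reviews from the JSON file, strips filler words, segments the Chinese text into words with jieba, and renders a word-cloud image with the wordcloud library. The image (`text.jpg`) is likewise saved to the current working directory.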

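Note: `jieba` and `wordcloud` must be installed (for example with `pip install jieba wordcloud`); `json` is part of the standard library. `WordCloud` also needs a font file that contains Chinese glyphs, here `simhei.ttf`.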
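The script removes filler words with `str.replace` before segmentation, which also deletes those characters when they occur inside longer words (for example, 电影 inside 电影院). A sketch of an alternative, assuming the text has already been segmented into a token list like the one `jieba.lcut` returns, is to filter whole tokens against a stopword set and count what remains. `STOPWORDS` mirrors the words the original removes; `filter_tokens` and its `min_len=2` cutoff (which drops single-character particles such as 的) are hypothetical choices, and the demo tokens are hand-written stand-ins for jieba output.

```python
from collections import Counter

# The same filler words the original script strips with str.replace
STOPWORDS = {"展开", "这篇", "影评", "电影", "这部", "可能", "剧情"}


def filter_tokens(tokens, stopwords=STOPWORDS, min_len=2):
    """Drop stopwords, whitespace-only tokens and tokens shorter than min_len."""
    return [
        t for t in (tok.strip() for tok in tokens)
        if t and len(t) >= min_len and t not in stopwords
    ]


if __name__ == "__main__":
    # Hand-written tokens standing in for jieba.lcut output
    tokens = ["这部", "电影", "的", "动作", "设计", "很", "精彩", "电影院", "动作"]
    counts = Counter(filter_tokens(tokens))
    print(counts.most_common(3))
```

Since a `Counter` is a word-to-frequency mapping, it could be passed to `WordCloud.generate_from_frequencies` instead of calling `generate` on a space-joined string.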

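```python
import json

import jieba
from wordcloud import WordCloud

# Read every review saved by the scraper (one JSON object per line)
with open("yewen.json", "r", encoding="utf-8") as f:
    texts = [json.loads(line)['text'] for line in f if line.strip()]

# Join reviews with newlines so words from adjacent reviews are not fused together
all_text = "\n".join(texts)

# Remove filler words that carry little meaning in the cloud (展开 is the "expand" link text)
for word in ['展开', '这篇', '影评', '电影', '这部', '可能', '剧情']:
    all_text = all_text.replace(word, '')

# Segment the Chinese text into words and join them with spaces for WordCloud
cut_text = jieba.lcut(all_text)
result = " ".join(cut_text)

wc = WordCloud(
    font_path='simhei.ttf',  # font with Chinese glyphs; must exist at this path
    background_color="white",
    max_words=600,
    width=1000,
    height=1000,
    min_font_size=20,
    max_font_size=100,
)
# Optional shape mask (needs `import matplotlib.pyplot as plt`); pass mask=mask to WordCloud
# mask = plt.imread('snake.jpg')
wc.generate(result)  # build the word cloud from the segmented text
wc.to_file("text.jpg")  # save the image
```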

Summary
