elasticsearch bucket 之rare terms聚合使用详解

huan1993 人气:01、背景

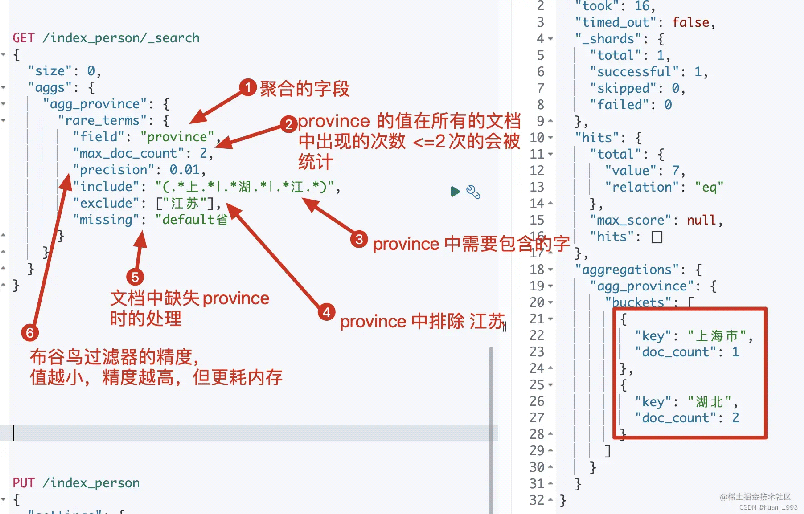

我们知道当我们使用 terms聚合时,当修改默认顺序为_count asc时,统计的结果是不准备的,而且官方也不推荐我们这样做,而是推荐使用rare terms聚合。rare terms是一个稀少的term聚合,可以一定程度的解决升序问题。

2、需求

统计province字段中包含上和湖的term数据,并且最多只能出现2次。获取到聚合后的结果。

3、前置准备

3.1 准备mapping

PUT /index_person

{

"settings": {

"number_of_shards": 1

},

"mappings": {

"properties": {

"id": {

"type": "long"

},

"name": {

"type": "keyword"

},

"province": {

"type": "keyword"

},

"sex": {

"type": "keyword"

},

"age": {

"type": "integer"

},

"pipeline_province_sex":{

"type": "keyword"

},

"address": {

"type": "text",

"analyzer": "ik_max_word",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

}

}

3.2 准备数据

PUT /_bulk

{"create":{"_index":"index_person","_id":1}}

{"id":1,"name":"张三","sex":"男","age":20,"province":"湖北","address":"湖北省黄冈市罗田县匡河镇"}

{"create":{"_index":"index_person","_id":2}}

{"id":2,"name":"李四","sex":"男","age":19,"province":"江苏","address":"江苏省南京市"}

{"create":{"_index":"index_person","_id":3}}

{"id":3,"name":"王武","sex":"女","age":25,"province":"湖北","address":"湖北省武汉市江汉区"}

{"create":{"_index":"index_person","_id":4}}

{"id":4,"name":"赵六","sex":"女","age":30,"province":"北京","address":"北京市东城区"}

{"create":{"_index":"index_person","_id":5}}

{"id":5,"name":"钱七","sex":"女","age":16,"province":"北京","address":"北京市西城区"}

{"create":{"_index":"index_person","_id":6}}

{"id":6,"name":"王八","sex":"女","age":45,"province":"北京","address":"北京市朝阳区"}

{"create":{"_index":"index_person","_id":7}}

{"id":7,"name":"九哥","sex":"男","age":25,"province":"上海市","address":"上海市嘉定区"}

4、实现需求

4.1 dsl

GET /index_person/_search

{

"size": 0,

"aggs": {

"agg_province": {

"rare_terms": {

"field": "province",

"max_doc_count": 2,

"precision": 0.01,

"include": "(.*上.*|.*湖.*|.*江.*)",

"exclude": ["江苏"],

"missing": "default省"

}

}

}

}

4.2 java代码

@Test

@DisplayName("稀少的term聚合,类似按照 _count asc 排序的terms聚合,但是terms聚合中按照_count asc的结果是不准的,需要使用 rare terms 聚合")

public void agg01() throws IOException {

SearchRequest searchRequest = new SearchRequest.Builder()

.size(0)

.index("index_person")

.aggregations("agg_province", agg ->

agg.rareTerms(rare ->

// 稀有词 的字段

rare.field("province")

// 该稀有词最多可以出现在几个文档中,最大值为100,如果要调整,需要修改search.max_buckets参数的值(尝试修改这个值,不生效)

// 在该例子中,只要是出现的次数<=2的聚合都会返回

.maxDocCount(2L)

// 内部布谷鸟过滤器的精度,精度越小越准,但是相应的消耗内存也越多,最小值为 0.00001,默认值为 0.01

.precision(0.01)

// 应该包含在聚合的term, 当是单个字段是,可以写正则表达式

.include(include -> include.regexp("(.*上.*|.*湖.*|.*江.*)"))

// 排出在聚合中的term,当是集合时,需要写准确的值

.exclude(exclude -> exclude.terms(Collections.singletonList("江苏")))

// 当文档中缺失province字段时,给默认值

.missing("default省")

)

)

.build();

System.out.println(searchRequest);

SearchResponse<Object> response = client.search(searchRequest, Object.class);

System.out.println(response);

}

一些注意事项都在注释中。

4.3 运行结果

5、max_doc_count 和 search.max_buckets

6、注意事项

rare terms统计返回的数据没有大小限制,而且受max_doc_count参数的限制,比如:如果复合 max_doc_count 的分组有60个,那么这60个分组会直接返回。max_doc_count的值最大为100,貌似不能修改。- 如果一台节点聚合收集的结果过多,那么很容易超过

search.max_buckets的值,此时就需要修改这个值。

# 临时修改

PUT /_cluster/settings

{"transient": {"search.max_buckets": 65536}}

# 永久修改

PUT /_cluster/settings

{"persistent": {"search.max_buckets": 65536}}

完整代码

参考文档

加载全部内容