Kubernetes Log Monitoring

Author: 愿许浪尽天涯

I. Overview

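Loki, developed by the Grafana Labs team and written in Go, is a horizontally scalable, highly available, multi-tenant log aggregation system. It is designed to be cost-effective and easy to operate because it does not index the contents of the logs; instead, it indexes a set of labels for each log stream. The Loki project was inspired by Prometheus.

The official tagline is "Like Prometheus, but for logs".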

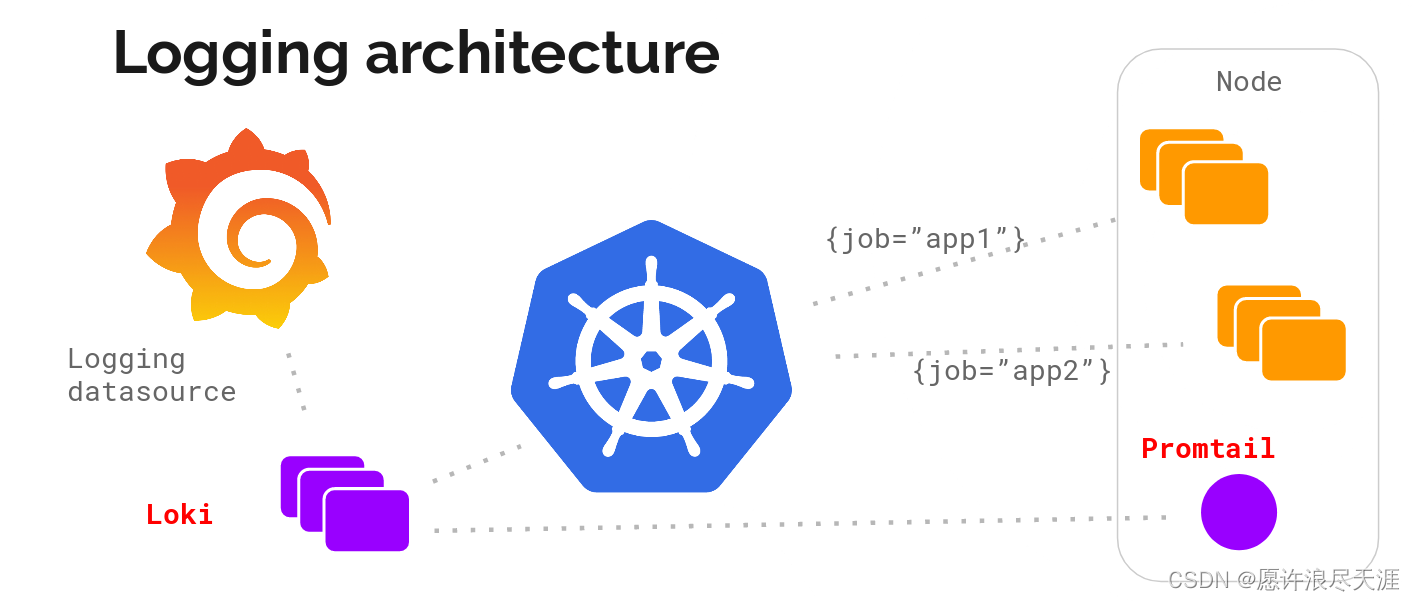

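1. Loki architecture

Loki: the main service, which stores logs and processes queries.

Promtail: the agent, which collects logs and forwards them to Loki.

Grafana: the web UI, which provides data display, querying, alerting, and related features.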

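2. How Loki works

Promtail collects the logs and sends them to the Distributor component. The Distributor validates the incoming log streams and forwards the validated logs, in batches and in parallel, to the Ingester component. The Ingester builds the received log streams into chunks, compresses them, and writes them to the configured backend storage.

The Querier component receives HTTP query requests and forwards them to the Ingesters, which return the data they still hold in memory. If no matching data is found in Ingester memory, the Querier queries the backend storage directly. Deduplication is built in.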
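The write path described above can be exercised by hand. The following is a minimal sketch, assuming Loki is reachable at the NodePort address used later in this article; the `job` label value `demo` and the helper names are hypothetical, chosen only for illustration. It posts one log line to `/loki/api/v1/push`, the same endpoint Promtail is pointed at.

```shell
# Assumption: Loki's NodePort address from this article; override via LOKI_URL.
LOKI_URL="${LOKI_URL:-http://192.168.1.1:30100}"

# Build the JSON body for /loki/api/v1/push.
# $1 = timestamp in nanoseconds (as a string), $2 = log line.
# This sketch does not escape quotes or backslashes inside the log line.
build_push_payload() {
  printf '{"streams":[{"stream":{"job":"%s"},"values":[["%s","%s"]]}]}' \
    "${JOB:-demo}" "$1" "$2"
}

# Send one line to Loki. `date +%s%N` needs GNU date.
push_line() {
  curl -sS -X POST -H 'Content-Type: application/json' \
    --data "$(build_push_payload "$(date +%s%N)" "$1")" \
    "$LOKI_URL/loki/api/v1/push"
}
```

Calling `push_line 'hello from curl'` should make the line queryable under the label `job="demo"` once Loki is running.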

II. Container log monitoring with Loki

1. Install Loki

1) Create the RBAC authorization

[root@k8s-master01 ~]# cat <<END > loki-rbac.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: logging
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki
  namespace: logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: loki
  namespace: logging
rules:
- apiGroups: ["extensions"]
  resources: ["podsecuritypolicies"]
  verbs: ["use"]
  resourceNames: [loki]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: loki
  namespace: logging
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: loki
subjects:
- kind: ServiceAccount
  name: loki
END
[root@k8s-master01 ~]# kubectl create -f loki-rbac.yaml

2) Create the ConfigMap

[root@k8s-master01 ~]# cat <<END > loki-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
data:
  loki.yaml: |
    auth_enabled: false
    ingester:
      chunk_idle_period: 3m
      chunk_block_size: 262144
      chunk_retain_period: 1m
      max_transfer_retries: 0
      lifecycler:
        ring:
          kvstore:
            store: inmemory
          replication_factor: 1
    limits_config:
      enforce_metric_name: false
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    schema_config:
      configs:
      - from: "2022-05-15"
        store: boltdb-shipper
        object_store: filesystem
        schema: v11
        index:
          prefix: index_
          period: 24h
    server:
      http_listen_port: 3100
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/boltdb-shipper-active
        cache_location: /data/loki/boltdb-shipper-cache
        cache_ttl: 24h
        shared_store: filesystem
      filesystem:
        directory: /data/loki/chunks
    chunk_store_config:
      max_look_back_period: 0s
    table_manager:
      retention_deletes_enabled: true
      retention_period: 48h
    compactor:
      working_directory: /data/loki/boltdb-shipper-compactor
      shared_store: filesystem
END
[root@k8s-master01 ~]# kubectl create -f loki-configmap.yaml
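One detail of this configuration is worth checking before applying it: as far as the Loki table manager documentation describes, `retention_period` should be a multiple of the index table `period` (here 48h and 24h). The sketch below is a hypothetical helper, not part of Loki, that checks this for durations written in hours only.

```shell
# Convert a duration such as "48h" to a number of hours.
# Only the "h" suffix is handled in this sketch.
to_hours() {
  case "$1" in
    *h) echo "${1%h}" ;;
    *)  echo "unsupported duration: $1" >&2; return 1 ;;
  esac
}

# Succeeds when the retention period ($1) is a whole multiple
# of the index period ($2).
retention_is_valid() {
  local retention period
  retention="$(to_hours "$1")" || return 1
  period="$(to_hours "$2")" || return 1
  [ $((retention % period)) -eq 0 ]
}
```

For the values in the ConfigMap, `retention_is_valid 48h 24h` succeeds, while a value such as `36h` would be rejected by this check.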

3) Create the StatefulSet

[root@k8s-master01 ~]# cat <<END > loki-statefulset.yaml
apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  type: NodePort
  ports:
  - port: 3100
    protocol: TCP
    name: http-metrics
    targetPort: http-metrics
    nodePort: 30100
  selector:
    app: loki
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: loki
  namespace: logging
  labels:
    app: loki
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app: loki
  serviceName: loki
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: loki
    spec:
      serviceAccountName: loki
      initContainers:
      - name: chmod-data
        image: busybox:1.28.4
        imagePullPolicy: IfNotPresent
        command: ["chmod","-R","777","/loki/data"]
        volumeMounts:
        - name: storage
          mountPath: /loki/data
      containers:
      - name: loki
        image: grafana/loki:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/loki/loki.yaml
        volumeMounts:
        - name: config
          mountPath: /etc/loki
        - name: storage
          mountPath: /data
        ports:
        - name: http-metrics
          containerPort: 3100
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
        readinessProbe:
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 45
        securityContext:
          readOnlyRootFilesystem: true
      terminationGracePeriodSeconds: 4800
      volumes:
      - name: config
        configMap:
          name: loki
      - name: storage
        hostPath:
          path: /app/loki
END
[root@k8s-master01 ~]# kubectl create -f loki-statefulset.yaml
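After the StatefulSet is created, it can be useful to wait until Loki actually answers on the `/ready` path that the probes above use. The following is a small sketch under the assumption that the NodePort address `192.168.1.1:30100` from this article is reachable; the function name and the default attempt counts are illustrative.

```shell
# Assumption: Loki's NodePort address from this article; override via LOKI_URL.
LOKI_URL="${LOKI_URL:-http://192.168.1.1:30100}"

# Poll /ready until it returns a success status, or give up.
# $1 = maximum attempts (default 30), $2 = seconds between attempts (default 5).
wait_for_loki() {
  local attempts="${1:-30}"
  local delay="${2:-5}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl exit non-zero on HTTP errors such as 503.
    if curl -fsS "$LOKI_URL/ready" >/dev/null 2>&1; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "not ready after $attempts attempt(s)" >&2
  return 1
}
```

Because the probes use `initialDelaySeconds: 45`, the first few attempts are expected to fail on a fresh deployment.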

2. Install Promtail

1) Create the RBAC authorization file

[root@k8s-master01 ~]# cat <<END > promtail-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: loki-promtail
  labels:
    app: promtail
  namespace: logging
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    app: promtail
  name: promtail-clusterrole
  namespace: logging
rules:
- apiGroups: [""]
  resources: ["nodes","nodes/proxy","services","endpoints","pods"]
  verbs: ["get", "watch", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: promtail-clusterrolebinding
  labels:
    app: promtail
  namespace: logging
subjects:
- kind: ServiceAccount
  name: loki-promtail
  namespace: logging
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  apiGroup: rbac.authorization.k8s.io
END
[root@k8s-master01 ~]# kubectl create -f promtail-rbac.yaml
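Whether the binding took effect can be checked with `kubectl auth can-i`, impersonating the `loki-promtail` ServiceAccount. The helper below is a sketch; the function name is hypothetical, and the `nodes/proxy` subresource is left out to keep the example simple.

```shell
# ServiceAccount created above, in the form expected by --as.
SA="system:serviceaccount:logging:loki-promtail"

# Print one line per missing permission; succeed only if none is missing.
check_promtail_rbac() {
  local res verb answer
  local failed=0
  for res in nodes services endpoints pods; do
    for verb in get watch list; do
      # can-i prints "yes" or "no" and exits non-zero on "no".
      answer="$(kubectl auth can-i "$verb" "$res" --as="$SA" 2>/dev/null || true)"
      if [ "$answer" != "yes" ]; then
        echo "missing: $verb $res"
        failed=1
      fi
    done
  done
  return "$failed"
}
```

Running `check_promtail_rbac` on the master node should print nothing when the ClusterRole and ClusterRoleBinding are in place.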

2) Create the ConfigMap

For the Promtail configuration file format, see the official documentation.

[root@k8s-master01 ~]# cat <<"END" > promtail-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
data:
  promtail.yaml: |
    client:
      backoff_config:
        max_period: 5m
        max_retries: 10
        min_period: 500ms
      batchsize: 1048576
      batchwait: 1s
      external_labels: {}
      timeout: 10s
    positions:
      filename: /run/promtail/positions.yaml
    server:
      http_listen_port: 3101
    target_config:
      sync_period: 10s
    scrape_configs:
    - job_name: kubernetes-pods-name
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels:
        - __meta_kubernetes_pod_label_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-app
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        source_labels:
        - __meta_kubernetes_pod_label_name
      - source_labels:
        - __meta_kubernetes_pod_label_app
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-direct-controllers
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: drop
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-indirect-controller
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: .+
        separator: ''
        source_labels:
        - __meta_kubernetes_pod_label_name
        - __meta_kubernetes_pod_label_app
      - action: keep
        regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
      - action: replace
        regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
        source_labels:
        - __meta_kubernetes_pod_controller_name
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_uid
        - __meta_kubernetes_pod_container_name
        target_label: __path__
    - job_name: kubernetes-pods-static
      pipeline_stages:
      - docker: {}
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: drop
        regex: ''
        source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_label_component
        target_label: __service__
      - source_labels:
        - __meta_kubernetes_pod_node_name
        target_label: __host__
      - action: drop
        regex: ''
        source_labels:
        - __service__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        replacement: $1
        separator: /
        source_labels:
        - __meta_kubernetes_namespace
        - __service__
        target_label: job
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: pod
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_container_name
        target_label: container
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
        - __meta_kubernetes_pod_annotation_kubernetes_io_config_mirror
        - __meta_kubernetes_pod_container_name
        target_label: __path__
END
[root@k8s-master01 ~]# kubectl create -f promtail-configmap.yaml
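The relabel rules are easier to follow with concrete values. The sketch below imitates two of them in plain shell; it is an illustration of what the rules compute, not Promtail's own code, and the function names and sample values are made up. The first function mirrors the final rule of each job, which joins the pod UID and container name with `/` and substitutes the result for `$1`. The second mirrors the `kubernetes-pods-indirect-controller` rule that strips a hash suffix from the controller name.

```shell
# Imitate the __path__ rule: source labels are joined with the separator "/"
# and the joined value replaces $1 in /var/log/pods/*$1/*.log.
# $1 = pod UID, $2 = container name.
promtail_path() {
  local joined="$1/$2"
  printf '/var/log/pods/*%s/*.log\n' "$joined"
}

# Imitate the __service__ rule for indirect controllers: keep the part of the
# controller name before the trailing 8 to 10 character hex suffix.
# The hyphen is moved to the end of the bracket expression because sed -E,
# unlike the regex engine Promtail uses, would read "z-." as a range.
service_from_controller() {
  printf '%s\n' "$1" | sed -E 's/^([0-9a-z.-]+)-[0-9a-f]{8,10}$/\1/'
}
```

For example, `promtail_path 1234-abcd app` yields `/var/log/pods/*1234-abcd/app/*.log`, a glob that matches the kubelet layout in which each pod directory name ends with the pod UID.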

3) Create the DaemonSet

[root@k8s-master01 ~]# cat <<END > promtail-daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: loki-promtail
  namespace: logging
  labels:
    app: promtail
spec:
  selector:
    matchLabels:
      app: promtail
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: promtail
    spec:
      serviceAccountName: loki-promtail
      containers:
      - name: promtail
        image: grafana/promtail:2.3.0
        imagePullPolicy: IfNotPresent
        args:
        - -config.file=/etc/promtail/promtail.yaml
        - -client.url=http://192.168.1.1:30100/loki/api/v1/push
        env:
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        volumeMounts:
        - mountPath: /etc/promtail
          name: config
        - mountPath: /run/promtail
          name: run
        - mountPath: /data/k8s/docker/data/containers
          name: docker
          readOnly: true
        - mountPath: /var/log/pods
          name: pods
          readOnly: true
        ports:
        - containerPort: 3101
          name: http-metrics
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsGroup: 0
          runAsUser: 0
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /ready
            port: http-metrics
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      volumes:
      - name: config
        configMap:
          name: loki-promtail
      - name: run
        hostPath:
          path: /run/promtail
          type: ""
      - name: docker
        hostPath:
          path: /data/k8s/docker/data/containers
      - name: pods
        hostPath:
          path: /var/log/pods
END
[root@k8s-master01 ~]# kubectl create -f promtail-daemonset.yaml

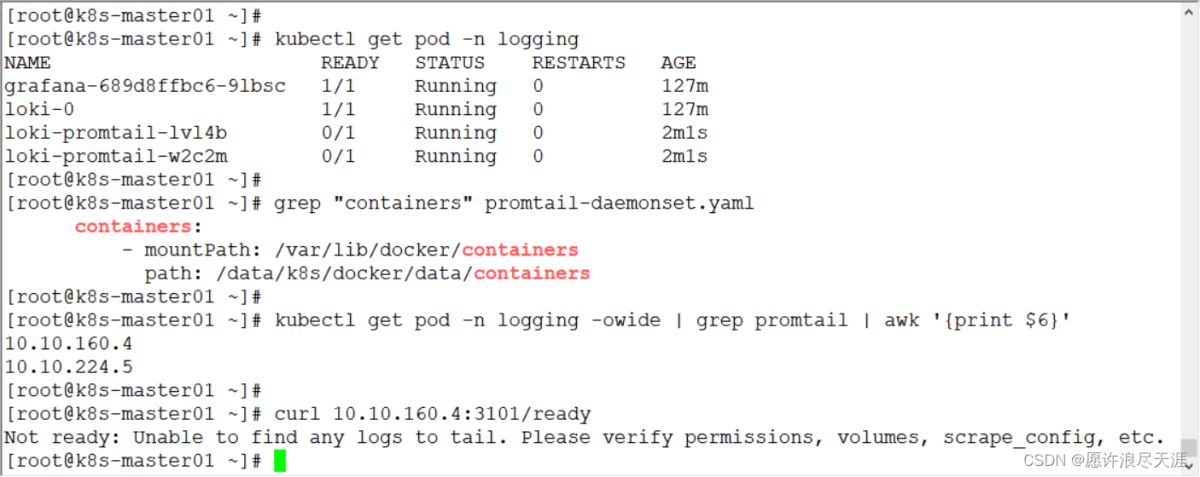

4)Promtail 关键配置

volumeMounts:

- mountPath: /data/k8s/docker/data/containers

name: docker

readOnly: true

- mountPath: /var/log/pods

name: pods

readOnly: true

volumes:

- name: docker

hostPath:

path: /data/k8s/docker/data/containers

- name: pods

hostPath:

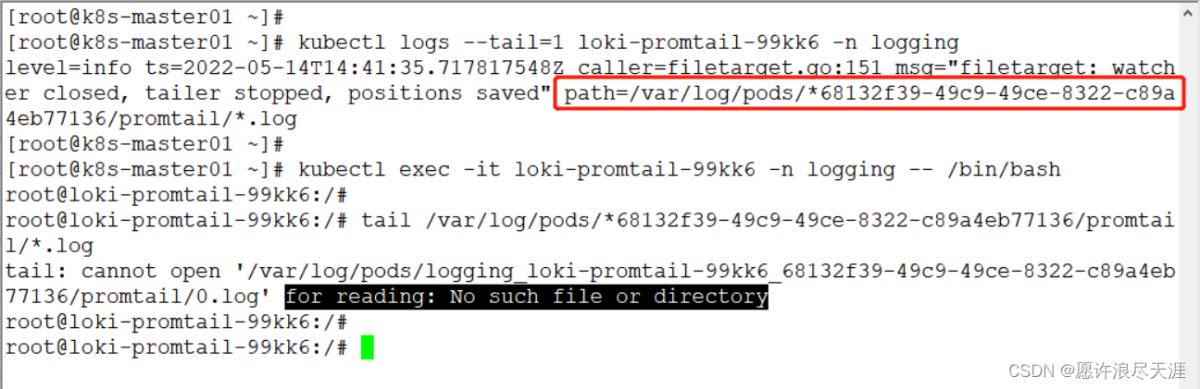

path: /var/log/pods这里需要注意,hostPath 和 mountPath 配置的路径要相同(这里说的相同指的是,要和宿主机的容器目录相同),因为 Promtail 在读取容器内的日志时,会通过 K8s 的 API 接口来返回容器信息(通过源路径取的)。如果配置的不同,将会导致 httpGet 检查失败。

输出:Not ready: Unable to find any logs to tail. Please verify permissions, volumes, scrape_config, etc. 信息,原因可能就是因为挂载路径没有匹配上,导致 Promtail tail 不到日志文件。可以先手动将就绪检查关闭,来查看 Promtail 容器的日志。
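A quick way to see whether the tailed glob resolves to real files is to count them. The following is a sketch with a hypothetical helper name. It relies on the assumption that, with the Docker runtime, the files under `/var/log/pods` are symlinks into Docker's container directory, so a link whose target is not mounted at the same path is dangling and cannot be read.

```shell
# Count readable log files under the pod log root.
# $1 = root directory (default /var/log/pods).
count_pod_logs() {
  local root="${1:-/var/log/pods}"
  local n=0
  local f
  for f in "$root"/*/*/*.log; do
    # -e follows symlinks, so dangling links are not counted;
    # an unmatched glob stays literal and is skipped as well.
    if [ -e "$f" ]; then
      n=$((n + 1))
    fi
  done
  echo "$n"
}
```

If `count_pod_logs` prints `0` in an environment that mirrors the Promtail container's mounts while pods are running on the node, the mount paths are the first thing to check.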

3. Install Grafana

[root@k8s-master01 ~]# cat <<END > grafana-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana:8.4.7
        imagePullPolicy: IfNotPresent
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
          limits:
            cpu: '1'
            memory: 2Gi
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
        volumeMounts:
        - name: storage
          mountPath: /var/lib/grafana
      volumes:
      - name: storage
        hostPath:
          path: /app/grafana
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  labels:
    app: grafana
  namespace: logging
spec:
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: 30030
  selector:
    app: grafana
END
[root@k8s-master01 ~]# mkdir -p /app/grafana
[root@k8s-master01 ~]# chmod -R 777 /app/grafana
[root@k8s-master01 ~]# kubectl create -f grafana-deploy.yaml

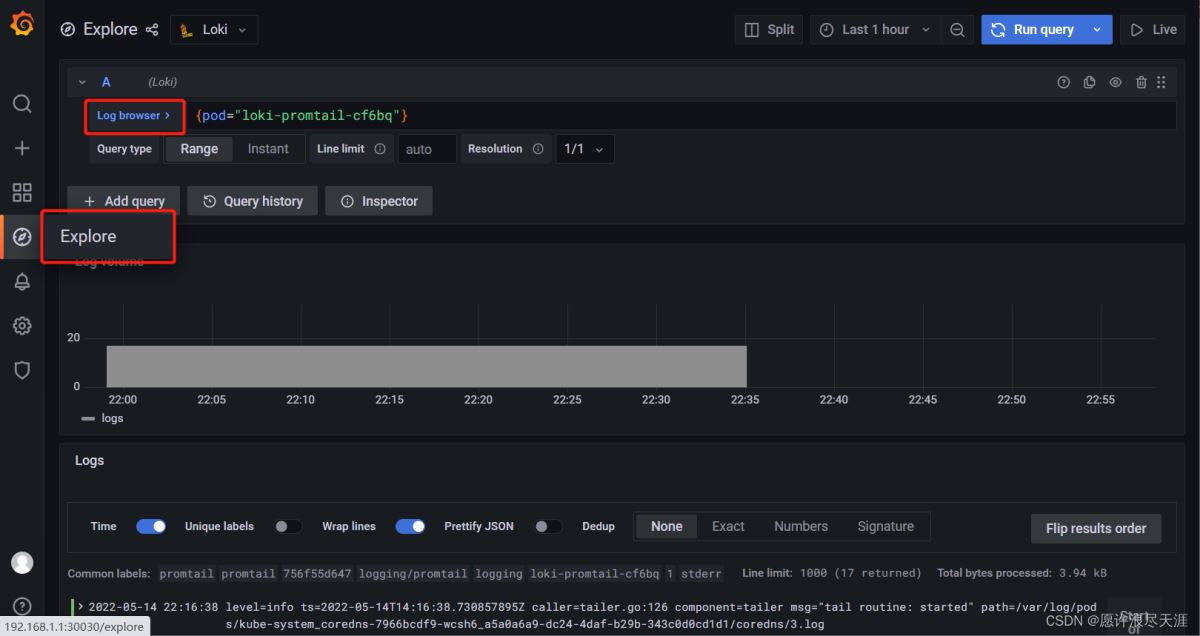

4. Verification

Open the Grafana console at http://192.168.1.1:30030 (username/password: admin/admin).

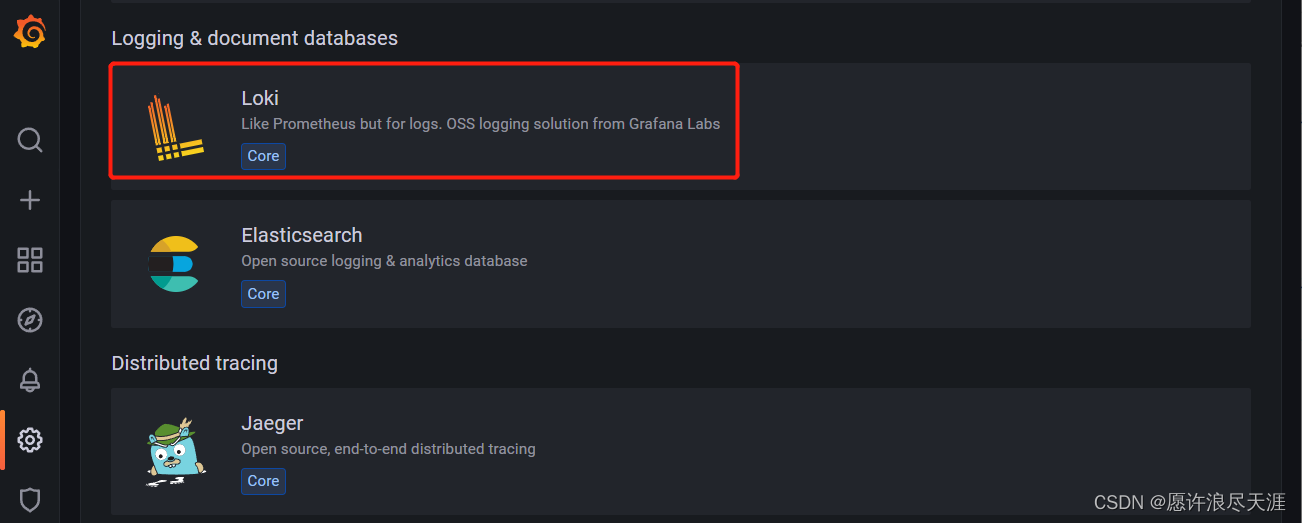

1) Configure a data source

2) Select the Loki data source

3) Set the Loki URL

4) Verify
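The same steps can also be scripted instead of clicked through. The sketch below assumes the NodePort addresses and the default admin/admin credentials from this article, and it assumes the in-cluster address `http://loki.logging.svc:3100` for the `loki` Service; the function names are hypothetical. It registers the data source through Grafana's `/api/datasources` endpoint and then runs a LogQL query against Loki's `/loki/api/v1/query_range` endpoint.

```shell
# Assumptions: addresses and credentials taken from this article.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.1.1:30030}"
LOKI_URL="${LOKI_URL:-http://192.168.1.1:30100}"
# Assumption: in-cluster DNS name of the loki Service in the logging namespace.
LOKI_SVC_URL="${LOKI_SVC_URL:-http://loki.logging.svc:3100}"

# JSON body describing a Loki data source.
datasource_payload() {
  printf '{"name":"Loki","type":"loki","access":"proxy","url":"%s"}' "$LOKI_SVC_URL"
}

# Register the data source in Grafana (steps 1 to 3).
add_datasource() {
  curl -sS -u admin:admin -H 'Content-Type: application/json' \
    -X POST --data "$(datasource_payload)" "$GRAFANA_URL/api/datasources"
}

# Run a LogQL query directly against Loki (step 4).
# $1 = LogQL expression, $2 = maximum number of lines (default 10).
query_logs() {
  curl -sS -G "$LOKI_URL/loki/api/v1/query_range" \
    --data-urlencode "query=$1" \
    --data-urlencode "limit=${2:-10}"
}
```

For example, `query_logs '{namespace="logging"}'` should return recent lines from the logging namespace, since the relabel rules above attach a `namespace` label to every stream.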
