Sharding-JDBC数据分片处理 使用Sharding-JDBC对数据进行分片处理详解

1024程序猿~嗷~ 人气:0想了解使用Sharding-JDBC对数据进行分片处理详解的相关内容吗,1024程序猿~嗷~在本文为您仔细讲解Sharding-JDBC数据分片处理的相关知识和一些Code实例,欢迎阅读和指正,我们先划重点:Sharding-JDBC数据,数据分片处理,下面大家一起来学习吧。

前言

Sharding-JDBC是ShardingSphere的第一个产品,也是ShardingSphere的前身。

它定位为轻量级Java框架,在Java的JDBC层提供的额外服务。它使用客户端直连数据库,以jar包形式提供服务,无需额外部署和依赖,可理解为增强版的JDBC驱动,完全兼容JDBC和各种ORM框架。

- 适用于任何基于Java的ORM框架,如:JPA, Hibernate, Mybatis, Spring JDBC Template或直接使用JDBC。

- 基于任何第三方的数据库连接池,如:DBCP, C3P0, BoneCP, Druid, HikariCP等支持任意实现JDBC规范的数据库。

- 目前支持MySQL,Oracle,SQLServer和PostgreSQL。

Sharding-JDBC的使用需要我们对项目进行一些调整:结构如下

这里使用的是springBoot项目改造

一、加入依赖

<!-- 这里使用了druid连接池 -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.9</version>

</dependency>

<!-- sharding-jdbc 包 -->

<dependency>

<groupId>com.dangdang</groupId>

<artifactId>sharding-jdbc-core</artifactId>

<version>1.5.4</version>

</dependency>

<!-- 这里使用了雪花算法生成组建,这个算法的实现的自己写的代码,各位客关老爷可以修改为自己的id生成策略 -->

<dependency>

<groupId>org.kcsm.common</groupId>

<artifactId>kcsm-idgenerator</artifactId>

<version>3.0.1</version>

</dependency>

二、修改application.yml配置文件

#启动接口

server:

port: 30009

spring:

jpa:

database: mysql

show-sql: true

hibernate:

# 修改不自动更新表

ddl-auto: none

#数据源0定义,这里只是用了一个数据源,各位客官可以根据自己的需求定义多个数据源

database0:

databaseName: database0

url: jdbc:mysql://kcsm-pre.mysql.rds.aliyuncs.com:3306/dstest?characterEncoding=utf8&useUnicode=true&useSSL=false&serverTimezone=Hongkong

username: root

password: kcsm@111

driverClassName: com.mysql.jdbc.Driver

三、数据源定义

package com.lzx.code.codedemo.config;

import com.alibaba.druid.pool.DruidDataSource;

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.stereotype.Component;

import javax.sql.DataSource;

/**

* 描述:数据源0定义

*

* @Auther: lzx

* @Date: 2019/9/9 15:19

*/

@Data

@ConfigurationProperties(prefix = "database0")

@Component

public class Database0Config {

private String url;

private String username;

private String password;

private String driverClassName;

private String databaseName;

public DataSource createDataSource() {

DruidDataSource result = new DruidDataSource();

result.setDriverClassName(getDriverClassName());

result.setUrl(getUrl());

result.setUsername(getUsername());

result.setPassword(getPassword());

return result;

}

}

四、数据源分配算法实现

package com.lzx.code.codedemo.config;

import com.dangdang.ddframe.rdb.sharding.api.ShardingValue;

import com.dangdang.ddframe.rdb.sharding.api.strategy.database.SingleKeyDatabaseShardingAlgorithm;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Component;

import java.util.ArrayList;

import java.util.Collection;

import java.util.List;

/**

* 描述:数据源分配算法

*

* 这里我们只用了一个数据源,所以所有的都只返回了数据源0

*

* @Auther: lzx

* @Date: 2019/9/9 15:27

*/

@Component

public class DatabaseShardingAlgorithm implements SingleKeyDatabaseShardingAlgorithm {

@Autowired

private Database0Config database0Config;

/**

* = 条件时候返回的数据源

* @param collection

* @param shardingValue

* @return

*/

@Override

public String doEqualSharding(Collection collection, ShardingValue shardingValue) {

return database0Config.getDatabaseName();

}

/**

* IN 条件返回的数据源

* @param collection

* @param shardingValue

* @return

*/

@Override

public Collection<String> doInSharding(Collection collection, ShardingValue shardingValue) {

List<String> result = new ArrayList<String>();

result.add(database0Config.getDatabaseName());

return result;

}

/**

* BETWEEN 条件放回的数据源

* @param collection

* @param shardingValue

* @return

*/

@Override

public Collection<String> doBetweenSharding(Collection collection, ShardingValue shardingValue) {

List<String> result = new ArrayList<String>();

result.add(database0Config.getDatabaseName());

return result;

}

}

五、数据表分配算法

package com.lzx.code.codedemo.config;

import com.dangdang.ddframe.rdb.sharding.api.ShardingValue;

import com.dangdang.ddframe.rdb.sharding.api.strategy.table.SingleKeyTableShardingAlgorithm;

import com.google.common.collect.Range;

import org.springframework.stereotype.Component;

import java.util.Collection;

import java.util.LinkedHashSet;

/**

* 描述: 数据表分配算法的实现

*

* @Auther: lzx

* @Date: 2019/9/9 16:19

*/

@Component

public class TableShardingAlgorithm implements SingleKeyTableShardingAlgorithm<Long> {

/**

* = 条件时候返回的数据源

* @param collection

* @param shardingValue

* @return

*/

@Override

public String doEqualSharding(Collection<String> collection, ShardingValue<Long> shardingValue) {

for (String eaach:collection) {

Long value = shardingValue.getValue();

value = value >> 22;

if(eaach.endsWith(value%10+"")){

return eaach;

}

}

throw new IllegalArgumentException();

}

/**

* IN 条件返回的数据源

* @param tableNames

* @param shardingValue

* @return

*/

@Override

public Collection<String> doInSharding(Collection<String> tableNames, ShardingValue<Long> shardingValue) {

Collection<String> result = new LinkedHashSet<>(tableNames.size());

for (Long value : shardingValue.getValues()) {

for (String tableName : tableNames) {

value = value >> 22;

if (tableName.endsWith(value % 10 + "")) {

result.add(tableName);

}

}

}

return result;

}

/**

* BETWEEN 条件放回的数据源

* @param tableNames

* @param shardingValue

* @return

*/

@Override

public Collection<String> doBetweenSharding(Collection<String> tableNames, ShardingValue<Long> shardingValue) {

Collection<String> result = new LinkedHashSet<>(tableNames.size());

Range<Long> range = shardingValue.getValueRange();

for (Long i = range.lowerEndpoint(); i <= range.upperEndpoint(); i++) {

for (String each : tableNames) {

Long value = i >> 22;

if (each.endsWith(i % 10 + "")) {

result.add(each);

}

}

}

return result;

}

}

六、数据源配置

package com.lzx.code.codedemo.config;

import com.dangdang.ddframe.rdb.sharding.api.ShardingDataSourceFactory;

import com.dangdang.ddframe.rdb.sharding.api.rule.DataSourceRule;

import com.dangdang.ddframe.rdb.sharding.api.rule.ShardingRule;

import com.dangdang.ddframe.rdb.sharding.api.rule.TableRule;

import com.dangdang.ddframe.rdb.sharding.api.strategy.database.DatabaseShardingStrategy;

import com.dangdang.ddframe.rdb.sharding.api.strategy.table.TableShardingStrategy;

import com.dangdang.ddframe.rdb.sharding.keygen.DefaultKeyGenerator;

import com.dangdang.ddframe.rdb.sharding.keygen.KeyGenerator;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

import java.sql.SQLException;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Map;

/**

* 描述:数据源配置

*

* @Auther: lzx

* @Date: 2019/9/9 15:21

*/

@Configuration

public class DataSourceConfig {

@Autowired

private Database0Config database0Config;

@Autowired

private DatabaseShardingAlgorithm databaseShardingAlgorithm;

@Autowired

private TableShardingAlgorithm tableShardingAlgorithm;

@Bean

public DataSource getDataSource() throws SQLException {

return buildDataSource();

}

private DataSource buildDataSource() throws SQLException {

//分库设置

Map<String, DataSource> dataSourceMap = new HashMap<>(2);

//添加两个数据库database0和database1

dataSourceMap.put(database0Config.getDatabaseName(), database0Config.createDataSource());

//设置默认数据库

DataSourceRule dataSourceRule = new DataSourceRule(dataSourceMap, database0Config.getDatabaseName());

//分表设置,大致思想就是将查询虚拟表Goods根据一定规则映射到真实表中去

TableRule orderTableRule = TableRule.builder("user")

.actualTables(Arrays.asList("user_0", "user_1", "user_2", "user_3", "user_4", "user_5", "user_6", "user_7", "user_8", "user_9"))

.dataSourceRule(dataSourceRule)

.build();

//分库分表策略

ShardingRule shardingRule = ShardingRule.builder()

.dataSourceRule(dataSourceRule)

.tableRules(Arrays.asList(orderTableRule))

.databaseShardingStrategy(new DatabaseShardingStrategy("ID", databaseShardingAlgorithm))

.tableShardingStrategy(new TableShardingStrategy("ID", tableShardingAlgorithm)).build();

DataSource dataSource = ShardingDataSourceFactory.createDataSource(shardingRule);

return dataSource;

}

@Bean

public KeyGenerator keyGenerator() {

return new DefaultKeyGenerator();

}

}

七、开始测试

定义一个实体

package com.lzx.code.codedemo.entity;

import com.fasterxml.jackson.annotation.JsonIgnoreProperties;

import com.fasterxml.jackson.databind.annotation.JsonSerialize;

import com.fasterxml.jackson.databind.ser.std.ToStringSerializer;

import lombok.*;

import org.hibernate.annotations.GenericGenerator;

import javax.persistence.*;

/**

* 描述: 用户

*

* @Auther: lzx

* @Date: 2019/7/11 15:39

*/

@Entity(name = "USER")

@Getter

@Setter

@ToString

@JsonIgnoreProperties(ignoreUnknown = true)

@AllArgsConstructor

@NoArgsConstructor

public class User {

/**

* 主键

*/

@Id

@GeneratedValue(generator = "idUserConfig")

@GenericGenerator(name ="idUserConfig" ,strategy="org.kcsm.common.ids.SerialIdGeneratorSnowflakeId")

@Column(name = "ID", unique = true,nullable=false)

@JsonSerialize(using = ToStringSerializer.class)

private Long id;

/**

* 用户名

*/

@Column(name = "USER_NAME",length = 100)

private String userName;

/**

* 密码

*/

@Column(name = "PASSWORD",length = 100)

private String password;

}

定义实体DAO

package com.lzx.code.codedemo.dao;

import com.lzx.code.codedemo.entity.User;

import org.springframework.data.jpa.repository.JpaRepository;

import org.springframework.data.jpa.repository.JpaSpecificationExecutor;

import org.springframework.data.rest.core.annotation.RepositoryRestResource;

/**

* 描述: 用户dao接口

*

* @Auther: lzx

* @Date: 2019/7/11 15:52

*/

@RepositoryRestResource(path = "user")

public interface UserDao extends JpaRepository<User,Long>,JpaSpecificationExecutor<User> {

}

测试类,插入1000条user数据

package com.lzx.code.codedemo;

import com.lzx.code.codedemo.dao.RolesDao;

import com.lzx.code.codedemo.dao.UserDao;

import com.lzx.code.codedemo.entity.Roles;

import com.lzx.code.codedemo.entity.User;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

@RunWith(SpringRunner.class)

@SpringBootTest

public class CodeDemoApplicationTests {

@Autowired

private UserDao userDao;

@Autowired

private RolesDao rolesDao;

@Test

public void contextLoads() {

User user = null;

Roles roles = null;

for(int i=0;i<1000;i++){

user = new User(

null,

"lzx"+i,

"123456"

);

roles = new Roles(

null,

"角色"+i

);

rolesDao.save(roles);

userDao.save(user);

try {

Thread.sleep(100);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

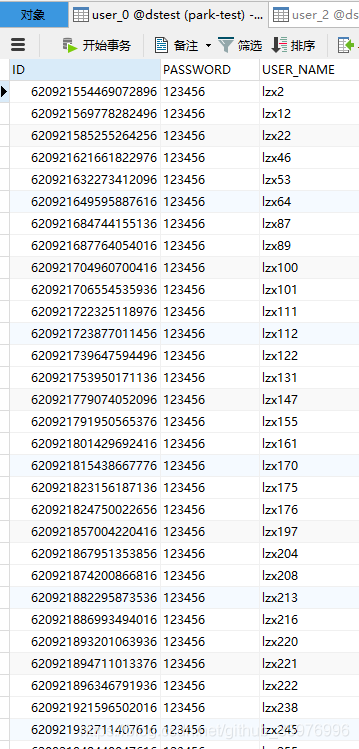

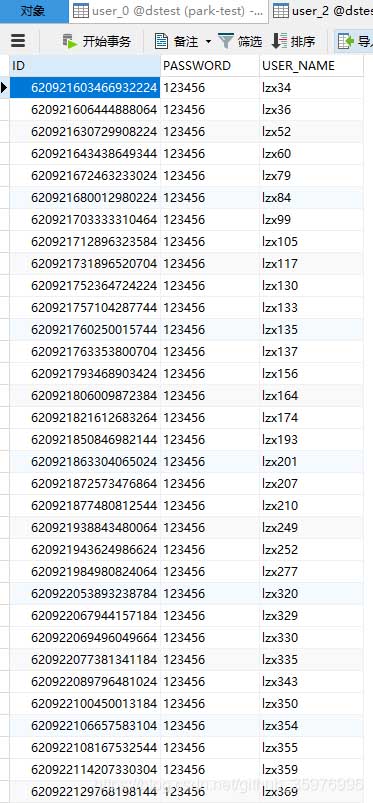

效果:数据被分片存储到0~9的数据表中

以上为个人经验,希望能给大家一个参考,也希望大家多多支持。

加载全部内容