Syncing MySQL Data to Elasticsearch with Logstash: A Detailed Guide

funOfFan

Environment

- One virtual machine

- logstash-7.2.1

- elasticsearch-7.2.1

- kibana-7.2.1

- MySQL 5.7

- JDK version: jdk1.8.0_211

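MySQL installation

Remove the preinstalled MariaDB service

# List the installed MariaDB packages and remove them

rpm -qa | grep mariadb

yum remove mariadb

# Check for leftover configuration and data, then delete them

ls /etc/my.cnf

ls /var/lib/mysql/

rm -rf /etc/my.cnf

rm -rf /var/lib/mysql/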

Install MySQL

# Download the official MySQL repo package

wget -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

# If the command above fails, open the URL in a browser; the download starts automatically

# Install the official MySQL repo

yum -y localinstall mysql57-community-release-el7-10.noarch.rpm

# Install the MySQL server

yum -y install mysql-community-server

Start the MySQL service

# Start the MySQL service

systemctl start mysqld.service

# Check the status of the MySQL service

systemctl status mysqld.service

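Change the MySQL configuration

# Find the initial default password in the mysqld log

grep "password" /var/log/mysqld.log

# Log in to the database with the initial password

mysql -u root -p

# Once logged in, change the root password

ALTER USER 'root'@'localhost' IDENTIFIED BY 'BUPTbupt@123';

# Switch to the mysql database and update the root account to allow remote access

use mysql;

update user set host = '%' where user = 'root';

# After these changes, exit MySQL; the direct edit of the user table is picked up when the service restarts in the next step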

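Restart the MySQL service

# Restart the MySQL service

systemctl restart mysqld.service

# Log in again to confirm that the new password works

# Remove the MySQL repo package; otherwise every later yum update will also upgrade mysqld

yum -y remove mysql57-community-release-el7-10.noarch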

Prepare test data

use mysql;

create table Persons ( PersonID int, LastName varchar(255), FirstName varchar(255), Address varchar(255), City varchar(255) );

insert into Persons values(1, 'Bryant', 'Kobe', '1st ave', 'Los');

insert into Persons values(2, 'James', 'LeBron', '1st ave', 'Miami');

insert into Persons values(3, 'Jordan', 'Michael', '1st ave', 'DC');

JDBC configuration

Download a mysql-connector build that matches the current JDK version from https://downloads.mysql.com/archives/c-j/

Extract it and move it to the target location

unzip mysql-connector-java-5.1.4.zip

mv mysql-connector-java-5.1.4 /root/logstash-7.2.1/

Elasticsearch installation

Download elasticsearch-7.2.1-linux-x86_64.tar.gz from the official website

As the root user, extract the archive and change the owner of the directory

tar -zxvf elasticsearch-7.2.1-linux-x86_64.tar.gz -C /usr/local/

cd /usr/local

mv elasticsearch-7.2.1/ elasticsearch

useradd elasticsearch

chown -R elasticsearch:elasticsearch /usr/local/elasticsearch/

Edit the required system configuration files

vi /etc/sysctl.conf

# Add the following lines

fs.file-max=655360

vm.max_map_count=262144

vi /etc/security/limits.conf

# Add the following lines

* soft nproc 204800

* hard nproc 204800

* soft nofile 655360

* hard nofile 655360

* soft memlock unlimited

* hard memlock unlimited

vi /etc/security/limits.d/20-nproc.conf

# Change the entry as follows

* soft nproc 204800

Apply the settings above

sysctl -p

# Press CTRL + D to leave the current terminal, then log in again

# Check whether the new limits are in effect

ulimit -a
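Reading through the full `ulimit -a` output by eye is error-prone, so the check can be scripted. The following is a minimal bash sketch, assuming a Linux host where `/proc/sys` is readable; the function names `meets_minimum`, `report`, and `check_es_limits` are hypothetical helpers introduced here, and the thresholds are the values configured above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that compare live limits with the values set in
# /etc/sysctl.conf and /etc/security/limits.conf above.

# meets_minimum ACTUAL REQUIRED
# Succeeds when ACTUAL is "unlimited" or a number >= REQUIRED.
meets_minimum() {
  local actual="$1" required="$2"
  [ "$actual" = "unlimited" ] && return 0
  [ "$actual" -ge "$required" ] 2>/dev/null
}

# report NAME ACTUAL REQUIRED
# Prints one OK/LOW line for a single setting.
report() {
  local name="$1" actual="$2" required="$3"
  if meets_minimum "$actual" "$required"; then
    echo "OK   $name=$actual"
  else
    echo "LOW  $name=$actual (expected >= $required)"
  fi
}

# check_es_limits
# Reads the live values; run it as the elasticsearch user after logging in again.
check_es_limits() {
  report vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144
  report fs.file-max      "$(cat /proc/sys/fs/file-max)"      655360
  report nofile           "$(ulimit -n)"                      655360
  report nproc            "$(ulimit -u)"                      204800
}
```

After sourcing the script, calling `check_es_limits` prints one line per setting; any line starting with `LOW` points at a value that did not take effect.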

Switch to the elasticsearch user for the remaining configuration

su elasticsearch

# Create the data directories

mkdir -p /home/elasticsearch/data1/elasticsearch

mkdir -p /home/elasticsearch/data2/elasticsearch

vi /usr/local/elasticsearch/config/elasticsearch.yml

# Change the following settings

cluster.name: elkbigdata

node.name: node-1

path.data: /home/elasticsearch/data1/elasticsearch/, /home/elasticsearch/data2/elasticsearch

path.logs: /usr/local/elasticsearch/logs

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9200

cluster.initial_master_nodes: ["node-1"]

xpack.security.enabled: false

xpack.security.authc.accept_default_password: false

vi /usr/local/elasticsearch/config/jvm.options

# Change the heap settings as follows; the value is usually set to half of physical memory and does not have to be 2g

-Xms2g

-Xmx2g

Open the port in the firewall, stop the firewall, and start the Elasticsearch service

# Return to the root user

systemctl start firewalld

firewall-cmd --add-port=9200/tcp --zone=public --permanent

firewall-cmd --reload

systemctl stop firewalld

su elasticsearch

cd /usr/local/elasticsearch/bin/

nohup ./elasticsearch &

Verify the installation

curl http://localhost:9200

# If output like the following appears, the installation succeeded

{

"name" : "node-1",

"cluster_name" : "elkbigdata",

"cluster_uuid" : "jpkQKXwOTYSr_K7D180c5g",

"version" : {

"number" : "7.2.1",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "fe6cb20",

"build_date" : "2019-07-24T17:58:29.979462Z",

"build_snapshot" : false,

"lucene_version" : "8.0.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
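Beyond the banner above, the cluster health endpoint gives a quick status check. The following is a minimal bash sketch, assuming `curl` is installed and Elasticsearch listens on `http://localhost:9200` as configured earlier; `ES_HOST`, `es_url`, `wait_for_es`, and `show_health` are hypothetical names introduced here for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; ES_HOST defaults to the address used in this guide.
ES_HOST="${ES_HOST:-http://localhost:9200}"

# es_url PATH
# Joins ES_HOST and PATH with exactly one slash between them.
es_url() {
  printf '%s/%s' "${ES_HOST%/}" "${1#/}"
}

# wait_for_es [TRIES]
# Polls once per second until the HTTP port answers or TRIES is exhausted.
wait_for_es() {
  local tries="${1:-30}" i
  for ((i = 1; i <= tries; i++)); do
    if curl -fsS "$(es_url /)" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
  done
  echo "Elasticsearch did not answer at $ES_HOST after $tries attempts" >&2
  return 1
}

# show_health
# Prints the cluster health document from the _cluster/health API.
show_health() {
  curl -fsS "$(es_url '/_cluster/health?pretty')"
}
```

A typical call right after starting the node is `wait_for_es 60 && show_health`. On a single-node setup like this one, a `yellow` status is expected once an index with replica shards exists, because a replica cannot be allocated on the same node as its primary.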

Kibana installation

- Download kibana-7.2.1-linux-x86_64.tar.gz from the official website

Extract the archive and create the state user

tar -zxvf kibana-7.2.1-linux-x86_64.tar.gz -C /usr/local/

cd /usr/local/

mv kibana-7.2.1-linux-x86_64/ kibana

useradd state

chown -R state:state kibana/

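Edit the Kibana configuration file

vi /usr/local/kibana/config/kibana.yml

# After opening the file, change the settings as follows (Kibana 7.x uses elasticsearch.hosts; the older elasticsearch.url setting is no longer accepted)

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://<IP address of the Elasticsearch VM>:9200"]

kibana.index: ".kibana"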

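Open the port in the firewall and start the service

systemctl start firewalld

firewall-cmd --add-port=5601/tcp --zone=public --permanent

firewall-cmd --reload

systemctl stop firewalld

# Start Kibana as the state user, in the same way Elasticsearch was started above

su state

cd /usr/local/kibana/bin/

nohup ./kibana &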

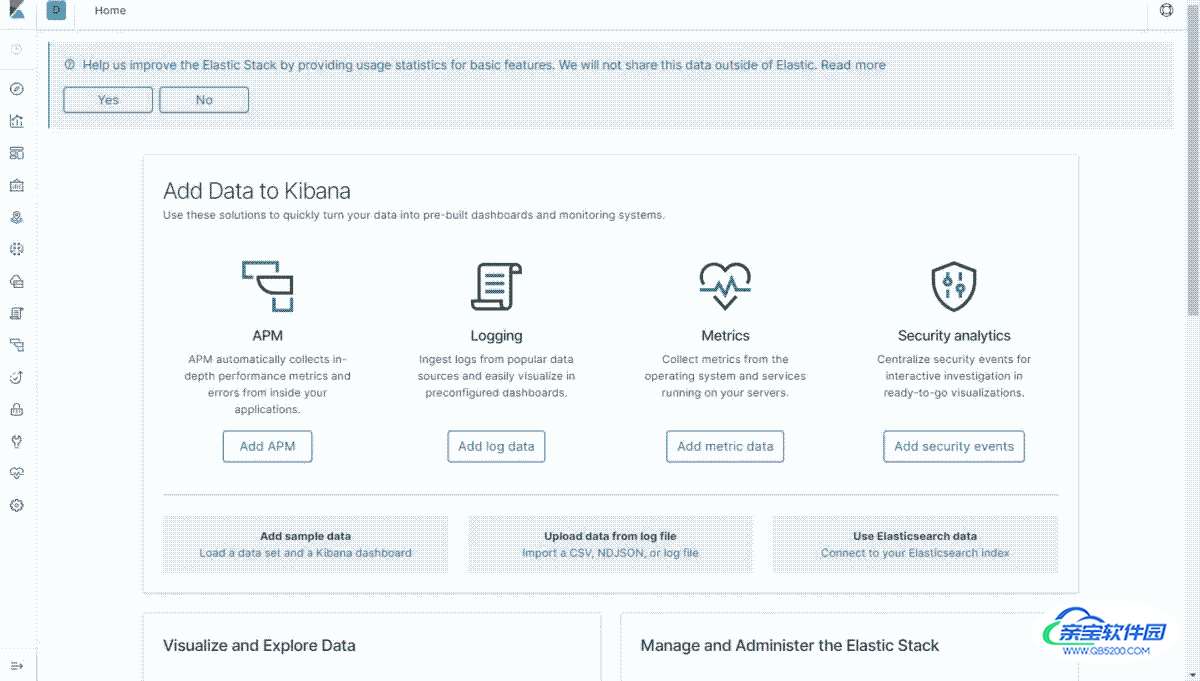

若正常运行,效果如下(运行kibana前,必须保证其连接的elastic search正常工作)

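Logstash installation

- Download logstash-7.2.1.tar.gz from the official website

Extract the archive (the directory keeps its name logstash-7.2.1, which the paths below rely on)

tar -zxvf logstash-7.2.1.tar.gz -C /root

cd /root

- Write a configuration file so that Logstash reads data from MySQL and writes it to the local Elasticsearch

vi ~/logstash-7.2.1/config/mylogstash.conf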

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

stdin{

}

jdbc{

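# Database address and database name; sets the character encoding, disables SSL, and enables auto-reconnect

# Here 10.112.103.2 is the IP address of the MySQL host, which is also the Elasticsearch host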

jdbc_connection_string => "jdbc:mysql://10.112.103.2:3306/mysql?characterEncoding=UTF-8&useSSL=FALSE&autoReconnect=true"

# Database user name

jdbc_user => "root"

# Password for the database user

jdbc_password => "BUPTbupt@123"

# Location of the JDBC driver jar

jdbc_driver_library => "/root/logstash-7.2.1/mysql-connector-java-5.1.4/mysql-connector-java-5.1.4-bin.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_default_timezone => "Asia/Shanghai"

jdbc_paging_enabled => "true"

jdbc_page_size => "320000"

lowercase_column_names => false

statement => "select * from Persons"

}

}

output {

elasticsearch {

hosts => ["http://localhost:9200"]

#index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"

# The Elasticsearch instance used here has no login user name or password configured

#user => "elastic"

#password => "changeme"

index => "persons"

document_type => "_doc"

document_id => "%{PersonID}"

}

stdout {

codec => json_lines

}

}

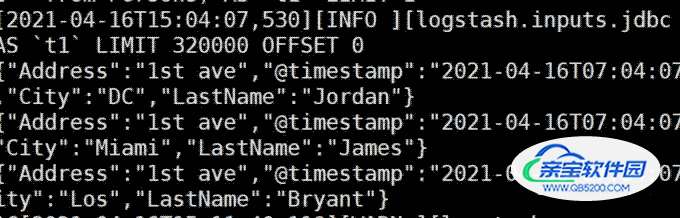

Start Logstash to sync the MySQL data into Elasticsearch

cd /root/logstash-7.2.1

./bin/logstash -f config/mylogstash.conf
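Once the pipeline has run, the result can be checked from the shell. The following is a minimal bash sketch, assuming `curl` and `sed` are available and the index name `persons` from the configuration above; `extract_count` and `verify_sync` are hypothetical helper names. It calls the Elasticsearch `_count` API and compares the document count with the number of rows inserted into `Persons`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; ES_HOST defaults to the address used in this guide.
ES_HOST="${ES_HOST:-http://localhost:9200}"

# extract_count
# Reads an _count response on stdin and prints the value of its "count" field.
extract_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# verify_sync INDEX EXPECTED
# Succeeds when INDEX currently holds exactly EXPECTED documents.
verify_sync() {
  local index="$1" expected="$2" actual
  actual="$(curl -fsS "$ES_HOST/$index/_count" | extract_count)"
  if [ "$actual" = "$expected" ]; then
    echo "OK: $index contains $actual documents"
  else
    echo "MISMATCH: $index contains '$actual' documents, expected $expected" >&2
    return 1
  fi
}
```

With the three test rows from earlier, `verify_sync persons 3` should succeed, and `curl "$ES_HOST/persons/_doc/1?pretty"` shows a single synced row. Because `document_id` is set to `%{PersonID}`, rerunning the pipeline overwrites the same documents rather than creating duplicates.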
